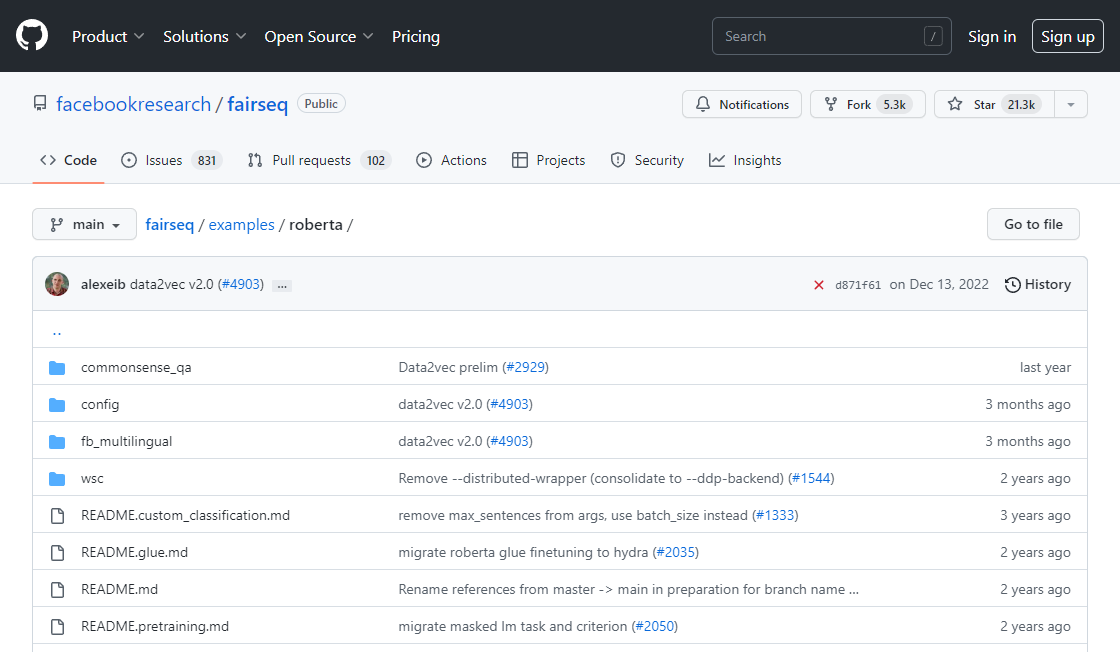

RoBERTa

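RoBERTa (Robustly Optimized BERT Pretraining Approach) is a variant of BERT that keeps BERT's architecture but changes how it is pre-trained. It trains longer on more data with larger batches, uses dynamic masking, and drops the next-sentence prediction objective.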
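
One of RoBERTa's training changes is dynamic masking. A new mask pattern is sampled every time a sequence is fed to the model, instead of fixing the masks once during preprocessing (static masking). The minimal Python sketch below illustrates the difference. It assumes a 20-token sequence and BERT's 15% masking rate, and it only chooses which positions are masked, not what they are replaced with. `choose_mask_positions`, `static_masks` and `dynamic_masks` are hypothetical helper names. Static masking is simplified here to a single fixed mask. The original BERT preprocessing duplicated the data so that each sequence received a few different fixed masks.

```python
import random

# Hypothetical helpers: a sketch of static vs. dynamic masking,
# not RoBERTa's actual preprocessing code.


def choose_mask_positions(num_tokens, rng, mask_prob=0.15):
    """Pick which token positions to mask (at least one), at a 15% rate."""
    k = max(1, round(num_tokens * mask_prob))
    return sorted(rng.sample(range(num_tokens), k))


def static_masks(num_tokens, epochs, seed=0):
    """Static masking (simplified): one mask pattern is computed once
    during preprocessing and reused in every epoch."""
    rng = random.Random(seed)
    positions = choose_mask_positions(num_tokens, rng)
    return [list(positions) for _ in range(epochs)]


def dynamic_masks(num_tokens, epochs, seed=0):
    """Dynamic masking: a fresh mask pattern is sampled each time the
    sequence is fed to the model (here, once per epoch)."""
    rng = random.Random(seed)
    return [choose_mask_positions(num_tokens, rng) for _ in range(epochs)]


if __name__ == "__main__":
    for name, fn in [("static", static_masks), ("dynamic", dynamic_masks)]:
        print(name)
        for epoch, positions in enumerate(fn(num_tokens=20, epochs=3)):
            print(f"  epoch {epoch}: masked positions {positions}")
```

With static masking, the model sees the same masked positions in every epoch. With dynamic masking, each pass over the data presents a different prediction problem for the same sentence.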

BERT vs. Water Cooler Trivia Participants

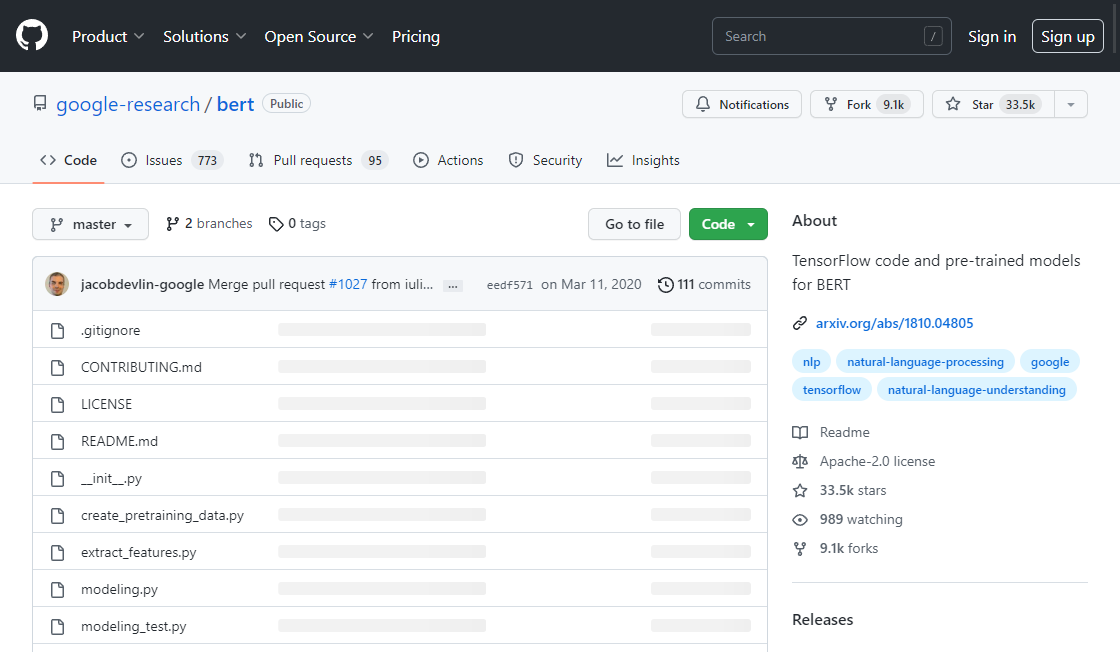

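BERT (Bidirectional Encoder Representations from Transformers) is a pre-training approach for natural language processing. A Transformer encoder is first pre-trained on unlabeled text and then fine-tuned to create state-of-the-art models for a wide range of tasks.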
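
BERT's main pre-training task is masked language modeling: some input tokens are hidden and the model is trained to predict them. The BERT paper chooses 15% of token positions for prediction. A chosen token becomes `[MASK]` 80% of the time, is replaced by a random token 10% of the time, and is left unchanged 10% of the time. The minimal Python sketch below shows only this corruption step. It assumes whitespace-split words instead of WordPiece tokens, and it selects each token independently with probability 0.15 rather than exactly 15% per sequence. `mask_tokens` is a hypothetical helper name.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """Hypothetical sketch of BERT-style masked-language-model corruption.

    Each token is selected for prediction with probability `mask_prob`.
    A selected token is replaced by [MASK] 80% of the time, by a random
    vocabulary token 10% of the time, and kept unchanged 10% of the time.
    Returns (corrupted, labels): labels[i] holds the original token at
    selected positions and None elsewhere (no loss is computed there).
    """
    corrupted = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # not selected for prediction
        labels[i] = tok
        r = rng.random()
        if r < 0.8:
            corrupted[i] = MASK
        elif r < 0.9:
            corrupted[i] = rng.choice(vocab)
        # else: keep the original token, but it is still predicted
    return corrupted, labels


if __name__ == "__main__":
    rng = random.Random(42)
    sentence = "the cat sat on the mat because it was tired".split()
    vocab = sorted(set(sentence))
    corrupted, labels = mask_tokens(sentence, vocab, rng, mask_prob=0.3)
    print(corrupted)
    print(labels)
```

Keeping some selected tokens unchanged or randomly replaced means the model cannot assume that only `[MASK]` positions matter. This narrows the gap between pre-training inputs and fine-tuning inputs, which contain no `[MASK]` tokens.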

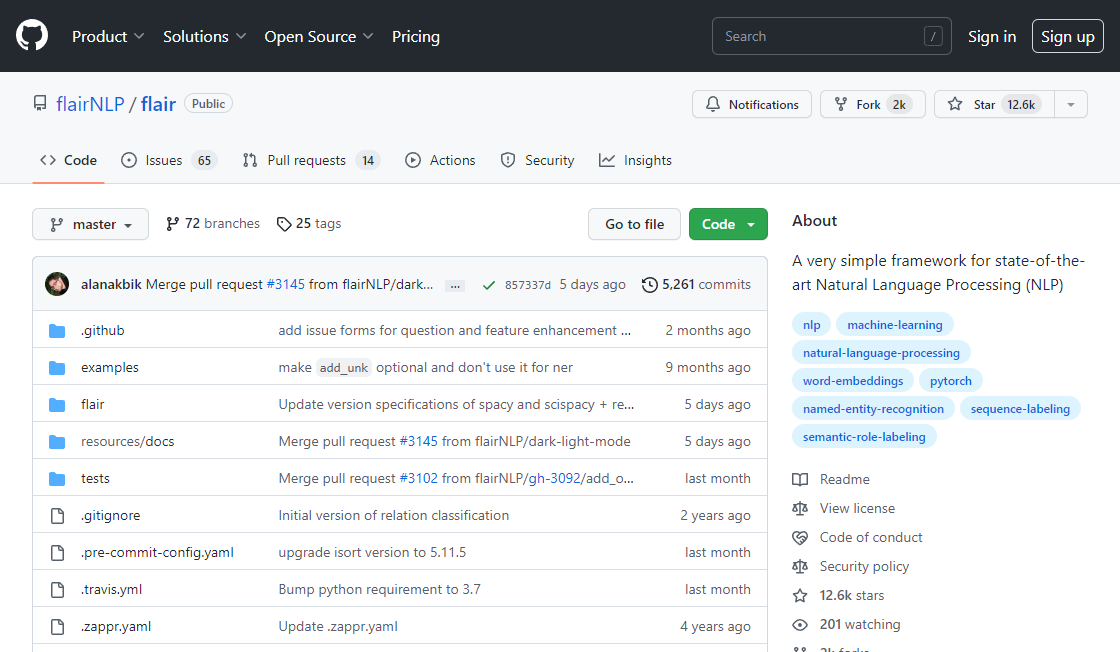

Flair NLP

Flair NLP is a lightweight library for natural language processing (NLP) in Python. It provides an easy-to-use interface to state-of-the-art NLP models, such as BERT and ELMo, that can be used for text classification, named entity recognition, part-of-speech tagging and more.

Google RT-1

RT-1: Robotics Transformer for real-world control at scale – Google AI Blog

Google BERT

Open Sourcing BERT: State-of-the-Art Pre-training for Natural Language Processing – Google AI Blog

DistilBERT

A distilled version of BERT: smaller, faster, cheaper and lighter
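
DistilBERT is trained with knowledge distillation: a smaller student network learns to reproduce the output distribution of the full BERT teacher. The minimal Python sketch below shows only the soft-target part of that idea, the cross-entropy between the teacher's and the student's temperature-softened distributions. The DistilBERT paper combines this term with a masked-language-modeling loss and a cosine-embedding loss, which are omitted here. The function names and the temperature of 2.0 are assumptions chosen for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Hypothetical sketch of a soft-target distillation loss:
    cross-entropy -sum_i t_i * log(s_i), where t and s are the teacher's
    and student's softmax outputs at the same temperature."""
    t = softmax(teacher_logits, temperature)
    s = softmax(student_logits, temperature)
    return -sum(ti * math.log(si) for ti, si in zip(t, s))


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]
    print(distillation_loss([3.5, 1.2, 0.4], teacher))  # student close to the teacher
    print(distillation_loss([0.5, 1.0, 4.0], teacher))  # student far from the teacher
```

The loss is smallest when the student's softened distribution matches the teacher's. The temperature spreads probability mass onto non-top classes, so the student also learns how the teacher ranks the wrong answers, not just which answer it prefers.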
