Lucy is a natural language processing (NLP) and machine learning (ML) platform that helps developers interpret text data, including social media updates, customer feedback, and product reviews. Its algorithms and interface are aimed at businesses that want to gain insights from their data quickly and efficiently, and developers can build its features into their own applications to improve customer experiences and support business growth.

Lexalytics Salience is a comprehensive suite of natural language processing tools that are developed to analyze and extract meaning from textual data. With advanced features like sentiment analysis, entity extraction, and other linguistic analytics, Salience provides businesses with a powerful mechanism to derive valuable insights from vast amounts of unstructured data. By leveraging Salience's capabilities, companies can make informed decisions and gain a competitive edge in their respective industries.

H2O is a powerful open-source machine learning platform that enables businesses to extract valuable insights from their data. It offers a range of advanced analytical capabilities, making it possible to analyze large datasets and create predictive models that can inform business decisions. H2O's user-friendly interface and robust feature set make it an ideal choice for companies looking to leverage the power of machine learning to gain a competitive edge. With H2O, businesses can unlock the full potential of their data and make smarter, data-driven decisions.

Google Cloud AutoML Vision is a cloud-based service for training custom machine learning models on images; it belongs to Google's Cloud AutoML family, which also includes products for natural language and translation. Developers can tailor their models to their specific needs and goals, and businesses can use the resulting models to automate tasks and improve efficiency. The platform is designed to be accessible to both technical and non-technical users.

H2O Driverless AI is a platform that uses automated machine learning to simplify building models for data scientists and data-driven enterprises. Through its user-friendly interface, users can create predictive models quickly, which helps organizations make data-driven decisions and improve their business outcomes.

Deep Dive is an innovative AI assistant designed to simplify the process of finding information in large sets of data. With its advanced algorithms and machine learning capabilities, Deep Dive offers a unique solution for individuals and businesses who struggle with data management. By providing swift access to relevant data, Deep Dive streamlines decision making and enhances productivity. Whether you're looking for insights on market trends or trying to identify patterns in customer behavior, Deep Dive has got you covered.

Shutterstock.AI (Upcoming)

AI Image Generator | Instant Text to Image | Shutterstock

Duolingo

Duolingo: Learn Spanish, French and other languages for free

Opera

Browser with Built-in VPN

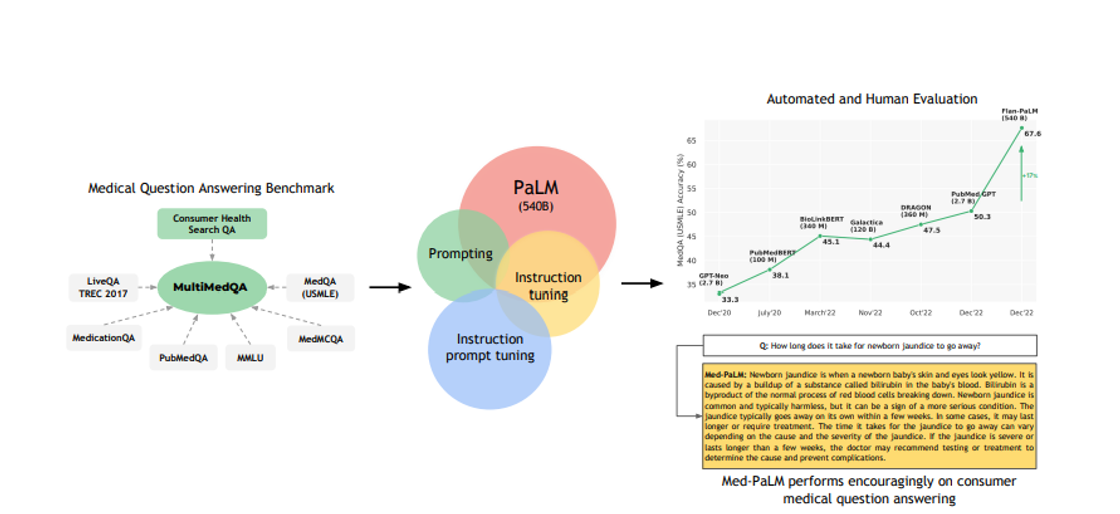

Med-PaLM

AI-Powered Medical Question Answering

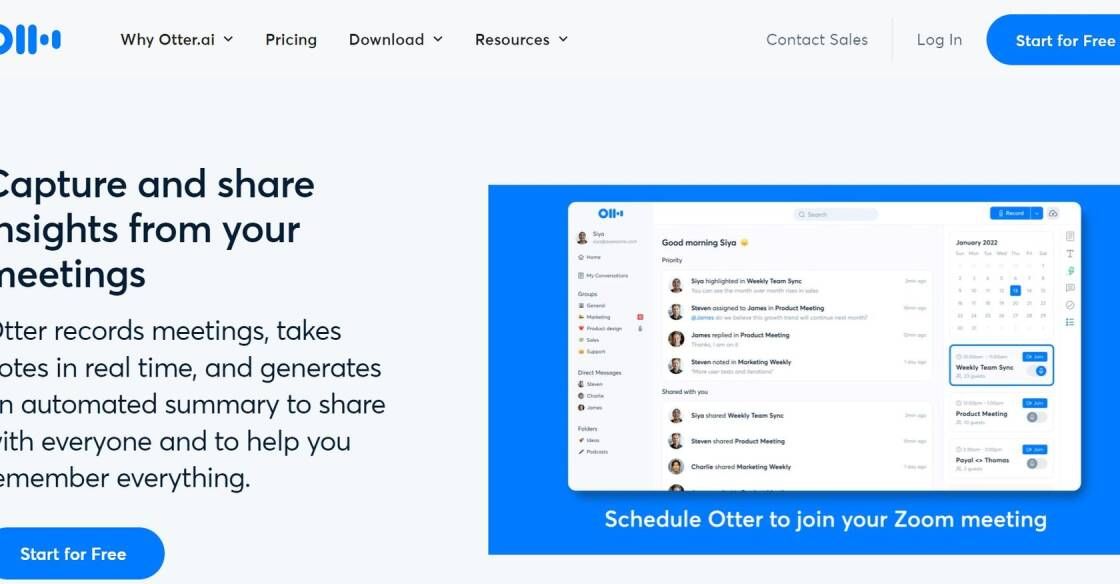

Otter AI

AI-Powered Transcription and Meeting Notes

Picsart

AI Writer - Create premium copy for free | Quicktools by Picsart

Deepfake AI Negotiation With DoNotPay

Negotiate with scammers and spammers on your behalf

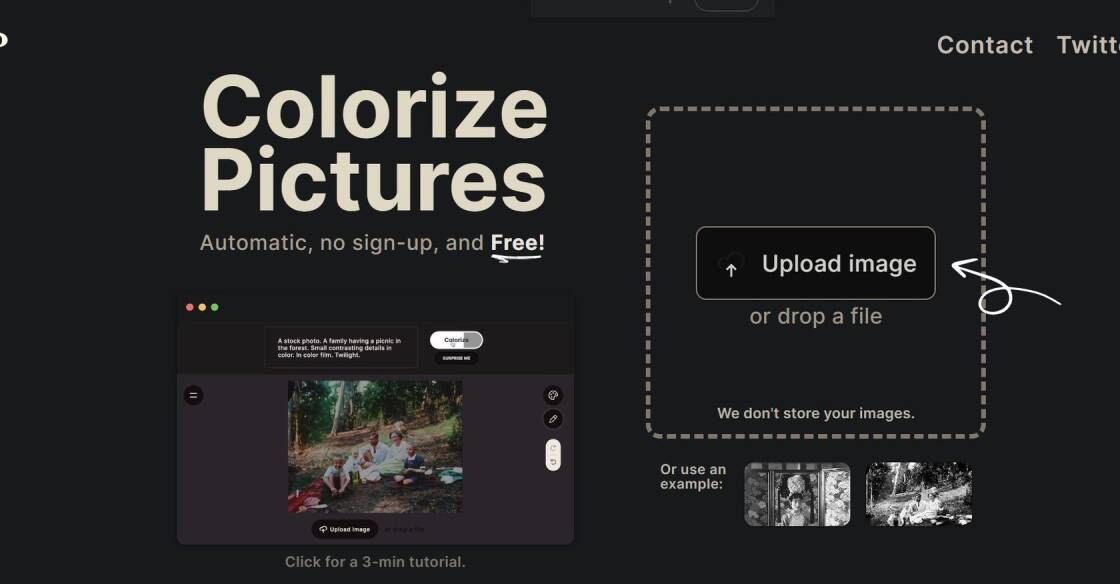

Palette.fm

AI Photo Colorization

Apache Hadoop is a popular open-source software framework that enables organizations to store, process, and analyze large amounts of data on clusters of commodity hardware. It offers a cost-effective way to manage big data, together with a simple programming model (MapReduce) for writing distributed data-processing applications. Many companies use the framework to handle structured, semi-structured, and unstructured data, and it has become a key technology for businesses that want to gain insights from their data and make informed decisions. Because both storage and processing scale out across many machines, Hadoop has changed the way organizations manage and use large datasets. This introduction explores the features and benefits of Apache Hadoop, as well as its applications and use cases in different industries.

Apache Hadoop is an open-source software framework designed for storing data and running applications on clusters of commodity hardware.

The primary purpose of Apache Hadoop is to provide massive storage for all kinds of data, along with a simple programming model for processing and analyzing large datasets.
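A minimal sketch of that programming model is the classic word count, run here in a single Python process. It uses nothing from Hadoop itself: `map_phase`, `shuffle_phase`, and `reduce_phase` are hypothetical names standing for the map, shuffle/sort, and reduce stages that the framework runs across a cluster.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(lines: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Map step: emit (word, 1) for every whitespace-separated word."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle/sort step: group every value emitted for the same key."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    # Hadoop hands keys to each reducer in sorted order; mimic that here.
    return dict(sorted(groups.items()))


def reduce_phase(groups: Dict[str, List[int]]) -> Dict[str, int]:
    """Reduce step: sum the counts collected for each word."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Chain the three stages, as the framework would do across many nodes."""
    return reduce_phase(shuffle_phase(map_phase(lines)))


if __name__ == "__main__":
    docs = ["Hadoop stores data", "Hadoop processes data"]
    print(word_count(docs))
    # prints {'data': 2, 'hadoop': 2, 'processes': 1, 'stores': 1}
```

The appeal of the model is that the developer writes only the map and reduce logic; on a real cluster, Hadoop takes care of distributing the input, running the shuffle, and retrying failed tasks.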

Apache Hadoop can run on clusters of commodity hardware, which means it can run on inexpensive, off-the-shelf hardware components.

Benefits of Apache Hadoop include its ability to store and process large amounts of data efficiently by spreading both storage and computation across many nodes, and its simple programming model, which lets developers work with large datasets without managing that distribution themselves.

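Java is Hadoop's native language for MapReduce programs. Other languages, such as Python, can be used through Hadoop Streaming, which runs any executable that reads from standard input and writes to standard output as a mapper or reducer, while JVM languages such as Scala can call Hadoop's Java APIs directly.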
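As an illustration of the Streaming route, the script below sketches a word-count mapper and reducer in Python. It assumes the Streaming convention of tab-separated key/value lines and relies on Hadoop sorting the mapper output by key before it reaches the reducer; the script name `wc.py` and its `map`/`reduce` arguments are hypothetical.

```python
import sys
from itertools import groupby
from typing import Iterable, Iterator


def mapper(lines: Iterable[str]) -> Iterator[str]:
    """Emit one 'word<TAB>1' line per word, as a Streaming mapper would."""
    for line in lines:
        for word in line.strip().lower().split():
            yield f"{word}\t1"


def reducer(sorted_lines: Iterable[str]) -> Iterator[str]:
    """Sum the counts for each key.

    Input must already be sorted by key, which Hadoop's shuffle guarantees
    for Streaming reducers, so equal keys arrive as consecutive lines.
    """
    pairs = (line.rstrip("\n").split("\t", 1) for line in sorted_lines)
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        total = sum(int(count) for _, count in group)
        yield f"{word}\t{total}"


# Only read stdin when explicitly invoked as "wc.py map" or "wc.py reduce".
if __name__ == "__main__" and len(sys.argv) > 1 and sys.argv[1] in ("map", "reduce"):
    step = mapper if sys.argv[1] == "map" else reducer
    for out in step(sys.stdin):
        print(out)
```

The same pipeline can be tried locally with `cat input.txt | python3 wc.py map | sort | python3 wc.py reduce`, where `sort` stands in for Hadoop's shuffle. On a cluster, the two commands would be passed to the Streaming JAR's `-mapper` and `-reducer` options.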

Hadoop's MapReduce engine is designed for batch processing. Real-time or near-real-time processing is possible, but it requires additional tools such as Apache Spark or Apache Storm running alongside Hadoop.

While Apache Hadoop may have a steep learning curve for some users, it provides extensive documentation and resources to help users understand and use its features.

Many large organizations use or have used Apache Hadoop, including Yahoo, Facebook, eBay, and LinkedIn.

Yes, Apache Hadoop can work with cloud services such as Amazon Web Services (AWS) and Microsoft Azure, for example through managed offerings like Amazon EMR and Azure HDInsight.

Yes, Apache Hadoop is free and open-source software released under the Apache License 2.0, so anyone can use, modify, and distribute it without cost.

| Competitor | Description | Key Differences |
|---|---|---|
| Apache Spark | An open-source distributed computing engine for big data processing and analytics. | Spark keeps intermediate data in memory, which typically makes it faster than Hadoop MapReduce, especially for iterative and interactive workloads. It also has a more flexible programming model, supports stream processing, and can run on Hadoop YARN and read data from HDFS. |
| Cloudera | A commercial platform built on Apache Hadoop that adds enterprise-level support, management, and security features. | Cloudera provides support and management tooling not included in open-source Hadoop, which suits large enterprises with complex data environments. |
| Hortonworks | Another commercial platform built on Apache Hadoop with enterprise-level support, management, and security features. | Similar to Cloudera in scope and target audience; Hortonworks merged with Cloudera in 2019. |

Apache Hadoop is an open-source software framework that enables organizations to store and process large amounts of data across a cluster of commodity hardware. Its distributed file system, HDFS (Hadoop Distributed File System), can store data of any kind, including structured, semi-structured, and unstructured data.
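HDFS stores each file as a sequence of fixed-size blocks that are spread across the cluster's nodes. The sketch below, assuming the 128 MiB default block size used by Hadoop 2.x and later (configured through `dfs.blocksize`), computes how a file would be split; the function name `split_into_blocks` is hypothetical and not part of any Hadoop API.

```python
from typing import List, Tuple

MIB = 1024 * 1024
# Assumed default of dfs.blocksize in Hadoop 2.x and later: 128 MiB.
DEFAULT_BLOCK_SIZE = 128 * MIB


def split_into_blocks(file_size: int,
                      block_size: int = DEFAULT_BLOCK_SIZE) -> List[Tuple[int, int]]:
    """Return (offset, length) pairs for each block of a file.

    Every block is block_size bytes except possibly the last, which holds
    only the remaining bytes rather than being padded to full size.
    """
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size > 0")
    return [(offset, min(block_size, file_size - offset))
            for offset in range(0, file_size, block_size)]


if __name__ == "__main__":
    for offset, length in split_into_blocks(300 * MIB):
        print(f"block at {offset // MIB} MiB: {length // MIB} MiB")
    # prints three blocks: 128 MiB, 128 MiB, and a final 44 MiB block
```

Because each block can live on a different node, a large file can be read and processed by many machines in parallel, one block per task.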

With Apache Hadoop, users have access to a simple programming model, MapReduce, that allows them to process and analyze large datasets in a scalable and efficient manner. This makes it well suited to organizations that need to process big data, such as social media companies, financial institutions, and healthcare providers.
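Scalability in MapReduce also depends on how map output is divided among reducers. Hadoop's default `HashPartitioner` sends a key to reducer `(key.hashCode() & Integer.MAX_VALUE) % numReduceTasks`. The Python sketch below mimics that rule but substitutes CRC32 for Java's `hashCode()`, an assumption made because Python's built-in string hash is randomized per process, so its reducer numbers will not match a real Hadoop job. The helpers `partition` and `assign` are hypothetical.

```python
import zlib
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def partition(key: str, num_reducers: int) -> int:
    """Choose a reducer for a key, in the spirit of Hadoop's HashPartitioner.

    CRC32 stands in for Java's hashCode(); masking with 0x7FFFFFFF keeps the
    value non-negative, like '& Integer.MAX_VALUE' does in Hadoop.
    """
    return (zlib.crc32(key.encode("utf-8")) & 0x7FFFFFFF) % num_reducers


def assign(pairs: Iterable[Tuple[str, int]],
           num_reducers: int) -> Dict[int, List[Tuple[str, int]]]:
    """Route every (key, value) pair to the bucket of its reducer."""
    buckets: Dict[int, List[Tuple[str, int]]] = defaultdict(list)
    for key, value in pairs:
        buckets[partition(key, num_reducers)].append((key, value))
    return dict(buckets)


if __name__ == "__main__":
    pairs = [("hadoop", 1), ("data", 1), ("hadoop", 1), ("spark", 1)]
    for reducer_id, items in sorted(assign(pairs, 3).items()):
        print(reducer_id, items)
```

Because every occurrence of a key lands on the same reducer, each reducer can produce complete results for its keys independently, and raising the number of reducers spreads the keys over more parallel tasks.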

One of the key benefits of Apache Hadoop is its ability to scale horizontally: it handles growing amounts of data by adding more nodes to the cluster rather than upgrading individual machines. The framework is also fault-tolerant. HDFS stores each block on several nodes (three by default), so data remains available when individual machines fail, and failed tasks are rerun on other nodes.
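Hadoop's fault tolerance for stored data comes from HDFS block replication, which can be illustrated with a simplified model. This sketch assumes the default replication factor of 3 (`dfs.replication`) but places replicas round-robin across hypothetical DataNode names; real HDFS uses a rack-aware placement policy, so treat `place_replicas` and `unavailable_blocks` as illustrative helpers only.

```python
from typing import Dict, List, Set

DEFAULT_REPLICATION = 3  # assumed default value of dfs.replication


def place_replicas(num_blocks: int, nodes: List[str],
                   replication: int = DEFAULT_REPLICATION) -> Dict[int, List[str]]:
    """Assign each block to `replication` distinct nodes, round-robin.

    Simplification: real HDFS uses rack-aware placement, not round-robin.
    """
    if replication > len(nodes):
        raise ValueError("replication factor exceeds cluster size")
    return {block: [nodes[(block + i) % len(nodes)] for i in range(replication)]
            for block in range(num_blocks)}


def unavailable_blocks(placement: Dict[int, List[str]], failed: Set[str]) -> List[int]:
    """Return the blocks whose every replica sits on a failed node."""
    return [block for block, replicas in placement.items()
            if all(node in failed for node in replicas)]


if __name__ == "__main__":
    nodes = ["dn1", "dn2", "dn3", "dn4", "dn5"]
    placement = place_replicas(10, nodes)
    print(unavailable_blocks(placement, {"dn1", "dn2"}))         # prints []
    print(unavailable_blocks(placement, {"dn1", "dn2", "dn3"}))  # prints [0, 5]
```

With five nodes and three replicas per block, any two nodes can fail without losing a block; a block becomes unavailable only when all three nodes holding it fail. In a real cluster the NameNode also re-replicates under-replicated blocks after a failure, which this model omits. Adding nodes to the list simply spreads the same blocks more widely, which is the horizontal-scaling idea in miniature.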

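Another advantage of Apache Hadoop is its flexibility. The framework supports a wide range of applications: Java is its native API language, and through Hadoop Streaming any program that reads standard input and writes standard output, such as a Python or R script, can act as a mapper or reducer. This means that developers can choose the tools and technologies that best suit their needs.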

In summary, Apache Hadoop is a powerful and flexible solution for managing large amounts of data. It provides organizations with the ability to store and process data at a massive scale, while also offering a simple programming model and fault-tolerant architecture.
