
Pythagora is a cutting-edge automated tool for integration testing that revolutionizes the way developers test their server applications. Unlike traditional testing methods, Pythagora does not require users to write any code. Instead, it analyzes server activity and creates tests based on the data obtained. The tool is incredibly user-friendly, requiring only a simple installation with npm, followed by a capturing command, then a test command. With Pythagora, developers can ensure that their applications are functioning optimally without the hassle of manual testing.

Form.com is an innovative AI-driven platform designed to automate forms and surveys, minimizing human errors, saving valuable time and money, and enhancing customer experiences. The platform leverages cutting-edge technology to streamline data collection, processing, and analysis, providing businesses with actionable insights to improve their operations and customer engagement. With its user-friendly interface and powerful features, Form.com simplifies the form and survey creation process while ensuring accuracy and completeness. This platform is a game-changer for organizations looking to optimize their workflows, reduce costs, and enhance customer satisfaction.

Google Cloud Platform is a revolutionary suite of cloud computing services that offers users the ability to build, deploy, and manage various applications. It provides an extensive range of tools and features that cater to businesses of all sizes, thereby ensuring a seamless and efficient workflow. Google Cloud Platform is designed to deliver high-performance computing resources, storage, and networking capabilities while adhering to stringent security protocols. With its flexibility and scalability, it has become the go-to choice for many organizations that require reliable and cost-effective cloud solutions.

Flexberry AI Assistant is a revolutionary tool that has been specifically designed to cater to the needs of business analysts. It aims to reduce the time spent on processing requirements and generating artifacts by automatically extracting information from natural language and structuring it into categories. The AI assistant also builds project metadata, generates diagrams and prototypes, and analyses statements and requirements for completeness. With its advanced capabilities, Flexberry AI Assistant is set to transform the way in which business analysts work, enabling them to be more efficient and productive.

Diffblue is an AI-powered unit testing platform that helps software developers test their code with greater accuracy and efficiency. It provides developers with the ability to quickly and easily generate automated tests for their code, thus reducing time and cost associated with manual testing. Diffblue's AI technology also enables developers to uncover more bugs and edge cases, thus improving the overall quality of their code.

GPT-3 Road Trip Plans For 2021 By CarMax

AI Plans a Road Trip | CarMax

YouChat

AI Chatbot Builder

Wolframalpha

Wolfram|Alpha: Computational Intelligence

RestorePhotos

Face Photo Restorer

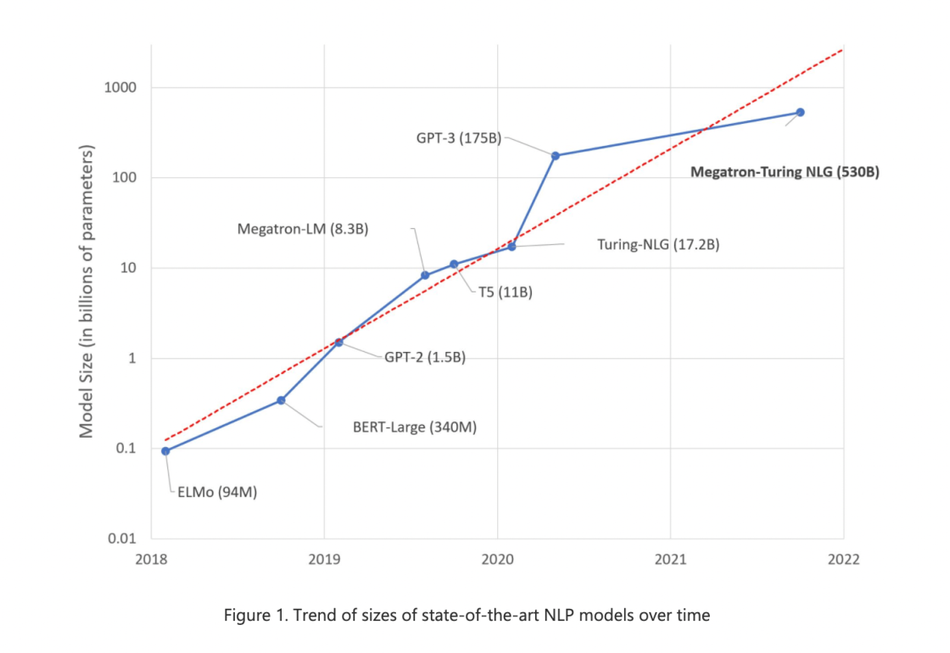

Megatron NLG

Using DeepSpeed and Megatron to Train Megatron-Turing NLG 530B, the World’s Largest and Most Powerful Generative Language Model | NVIDIA Technical Blog

TwitterBio

AI Twitter Bio Generator – Vercel

Make (formerly known as Integromat)

Automation Platform

Nijijourney

NijiJourney AI for the anime fans. The new niji model is tuned with a fine eye to produce anime and illustrative styles. It has vastly more knowledge of anime, anime styles, and anime aesthetics. It's great at dynamic and action shots, and character-focused compositions in general.

Apache Spark is an open-source distributed engine for processing and analyzing data. It was originally developed at the University of California, Berkeley's AMPLab in 2009 and donated to the Apache Software Foundation in 2013. Since then, it has become one of the most popular big data processing engines, offering a fast and flexible way to handle large-scale data processing tasks. By keeping intermediate data in memory, Spark can run some workloads up to 100 times faster than traditional Hadoop MapReduce. Spark does not include its own storage layer; it commonly reads from and writes to the Hadoop Distributed File System (HDFS) and other storage systems, and it provides APIs for Java, Scala, Python, R, and SQL. Spark is also versatile and can be used for batch processing, stream processing, machine learning, and graph processing. It has been widely adopted across industries including finance, healthcare, retail, and telecommunications, making it a common choice for organizations that process and analyze data at scale.

Apache Spark is an open-source distributed system for data processing and analysis that can handle large-scale data processing in near real time.

Apache Spark offers several benefits such as faster processing speed, fault tolerance, and support for multiple programming languages.

Apache Spark supports several programming languages, including Python, Java, Scala, R, and SQL.

Apache Spark is a faster and more flexible alternative to Hadoop MapReduce. It can process data in near real time and supports multiple programming languages.

Apache Spark can be used for various types of data processing, including batch processing, stream processing, machine learning, graph processing, and SQL-based processing.

Apache Spark uses a distributed computing model, dividing large datasets into smaller chunks that can be processed in parallel across multiple nodes.
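The partition-and-parallelize model described above can be sketched in plain Python. This is only an illustration of the idea, not Spark's API: the function names (`split_into_partitions`, `count_words`, `parallel_word_count`) are hypothetical, and a thread pool on one machine stands in for the worker nodes of a cluster.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(records, num_partitions):
    """Deal records round-robin into num_partitions smaller chunks."""
    return [records[i::num_partitions] for i in range(num_partitions)]


def count_words(partition):
    """Work done independently on one chunk (the 'map' side)."""
    counts = Counter()
    for line in partition:
        counts.update(line.split())
    return counts


def parallel_word_count(lines, num_partitions=3):
    """Process every chunk concurrently, then merge the partial results."""
    partitions = split_into_partitions(lines, num_partitions)
    # Threads stand in for worker nodes here (an assumption of this sketch).
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partial_counts = list(pool.map(count_words, partitions))
    total = Counter()
    for partial in partial_counts:
        total.update(partial)  # the 'reduce' side: combine per-chunk counts
    return total


lines = ["spark is fast", "spark is flexible", "hadoop is batch", "spark scales"]
word_counts = parallel_word_count(lines)
print(word_counts.most_common(2))
```

Each chunk is processed without knowledge of the others, so adding workers only requires adding partitions; the merge step is the only place where results meet.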

Yes, Apache Spark can be used for real-time processing through its stream processing capabilities.
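Spark's streaming support processes incoming data as a series of small batches. The plain-Python sketch below illustrates that micro-batch idea under simplifying assumptions: the helper `micro_batches` is hypothetical, and it groups records by count, whereas Spark groups them by time interval.

```python
from itertools import islice


def micro_batches(stream, batch_size):
    """Yield successive small batches from a (possibly unbounded) stream."""
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return  # the stream is exhausted
        yield batch


# Hypothetical sensor readings arriving one at a time.
readings = [3, 8, 1, 9, 4, 7, 2]

# Each batch is processed with ordinary batch logic (here, a sum).
batch_sums = [sum(batch) for batch in micro_batches(readings, batch_size=3)]
print(batch_sums)
```

Treating a stream as many tiny batch jobs is what lets the same processing logic serve both batch and streaming workloads.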

Apache Spark can have a steep learning curve, especially for beginners. However, there are many resources available online for learning and mastering the technology.

Many large companies use Apache Spark for their data processing needs, including Netflix, IBM, Yahoo, and eBay.

Yes, Apache Spark is an open source project and is free to use. However, some commercial distributions of Apache Spark may require a license fee.

| Apache Spark | Apache Flink | Apache Hadoop | IBM InfoSphere BigInsights |
|---|---|---|---|
| Open-source distributed system for data processing and analysis | Open-source stream processing framework | Open-source big data processing framework | Commercial big data analytics platform |
| Written primarily in Scala | Written in Java and Scala | Written in Java | Written in Java |
| Supports batch processing, streaming, machine learning, and graph processing | Supports stream processing and batch processing | Supports batch processing and real-time processing | Supports batch processing and real-time processing |
| Provides high-level APIs in Java, Scala, Python, and R | Provides APIs in Java and Scala | Provides APIs in Java | Provides APIs in Java |
| Strong community support | Growing community support | Strong community support | Commercial support |

Apache Spark is an open-source distributed system that is designed for data processing and analysis. It is a fast and powerful engine that can process large amounts of data in real-time. Spark has become one of the most popular big data processing frameworks due to its ease of use, flexibility, and scalability. Here are some essential things you should know about Apache Spark:

1. Spark is built for speed: Spark is designed to be faster than Hadoop MapReduce for batch processing, SQL queries, and streaming analytics. It achieves this by using in-memory caching and optimized query execution plans.

2. It supports multiple languages: Spark provides APIs for programming in Java, Scala, Python, and R. This allows developers to choose the language they are most comfortable with while still being able to use Spark.

3. It has a wide range of use cases: Spark can be used for various data processing tasks such as batch processing, real-time processing, machine learning, graph processing, and more. It is suitable for both small and large datasets.

4. Spark runs on a cluster: Spark can run on a cluster of machines, making it easy to scale horizontally. A driver program coordinates each job, and executors on the worker nodes run the tasks.

5. It has a rich ecosystem: Spark has a vast ecosystem of libraries and tools such as Spark SQL, Spark Streaming, MLlib, GraphX, and more. These libraries make it easy to perform complex data processing tasks.

6. Spark supports multiple data sources: Spark can process data from various sources, including Hadoop Distributed File System (HDFS), Cassandra, Amazon S3, and more. It also supports different data formats such as Parquet, Avro, and JSON.

7. It has an active community: Spark has a large and active community of contributors and users, making it easy to get help and support when needed. The community regularly releases new versions with bug fixes and new features.
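The first point in the list above credits in-memory caching for part of Spark's speed. The idea can be sketched in a few lines of plain Python. This is a toy model rather than Spark's implementation: the `CachedDataset` class and the `load_events` function are hypothetical names introduced only for illustration.

```python
class CachedDataset:
    """Toy dataset that loads its rows once and keeps them in memory."""

    def __init__(self, loader):
        self._loader = loader
        self._rows = None
        self.load_count = 0  # how many times the slow load actually ran

    def rows(self):
        if self._rows is None:
            # First access: pay the cost of loading, then keep the result.
            self._rows = list(self._loader())
            self.load_count += 1
        return self._rows


def load_events():
    """Stand-in for an expensive read from disk or a remote store."""
    return [
        {"user": "a", "amount": 10},
        {"user": "b", "amount": 5},
        {"user": "a", "amount": 7},
    ]


events = CachedDataset(load_events)

# Two different queries reuse the same in-memory rows.
total = sum(row["amount"] for row in events.rows())
users = {row["user"] for row in events.rows()}
print(total, sorted(users), events.load_count)
```

Without the cache, every query would repeat the expensive load; with it, only the first query pays that cost, which is the kind of saving that matters most for iterative and interactive workloads.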

In conclusion, Apache Spark is a powerful and flexible distributed system for data processing and analysis. It has become the go-to framework for big data processing due to its speed, scalability, and ease of use. With its vast ecosystem of libraries and tools, Spark can handle a wide range of data processing tasks, making it an essential tool for data scientists and developers.
