AnalyzeMe is an AI tool for conducting PEST (Political, Economic, Social, Technological) analysis. Users enter their industry or areas of interest, and AnalyzeMe returns an analysis of the market environment. The resulting insights help users make informed decisions in a changing business landscape.

The Centre for AI Safety (CAIS) is a non-profit organization dedicated to advancing AI safety. As artificial intelligence continues to evolve and influence various aspects of our lives, ensuring its safe development becomes increasingly crucial. CAIS recognizes the potential risks posed by AI systems and aims to address them through rigorous research, innovative solutions, and collaborations with industry leaders. By promoting responsible and ethical practices in the field of AI, CAIS strives to protect society from potential harm and create a future where AI technology coexists safely with humanity.

The EU AI Act, also known as the Artificial Intelligence Act, is European Union legislation, originally proposed in 2021 and adopted in 2024, that regulates the use of artificial intelligence within the European Union. This comprehensive framework seeks to strike a balance between fostering innovation and protecting fundamental rights, safety, and ethical considerations. By addressing issues such as transparency, accountability, and human oversight, the EU AI Act aims to ensure that AI technologies are developed and deployed in a responsible and trustworthy manner. With its potential to affect various sectors and aspects of society, this act represents a crucial step towards establishing a robust regulatory framework for AI within the European Union.

The AI SWOT Analysis Generator is a powerful tool that can help companies create a comprehensive analysis of their organization. Using artificial intelligence, the online tool creates a SWOT analysis that outlines the organization's internal and external factors that could impact its success. SWOT stands for Strengths, Weaknesses, Opportunities, and Threats, which are essential factors that can affect any company or project. This innovative technology enables organizations to identify their strengths and weaknesses and discover opportunities and threats, allowing them to make strategic decisions that can improve their overall performance.

DecisionMentor is a multi-criteria decision-making application for complex decisions in both public and private settings. The software uses artificial intelligence (AI) algorithms to provide chat-based suggestions for a wide range of decision-making problems. With DecisionMentor, users can simplify the often-overwhelming task of decision-making with a scientifically backed approach that is also enjoyable to use. It can help with decisions such as which car to buy, which school to attend, or which investment opportunity to pursue.

Oogway is an AI-powered decision assistant designed to help people make informed decisions quickly. Powered by GPT-3, a large language model, Oogway interprets conversational input and responds with personalized advice and recommendations based on the user's situation. Oogway is positioned as an assistant for any decision you need to make.

AI Roguelite

AI Roguelite on Steam

GPT-3 Road Trip Plans For 2021 By CarMax

AI Plans a Road Trip | CarMax

MarioGPT

AI-generated Super Mario Levels

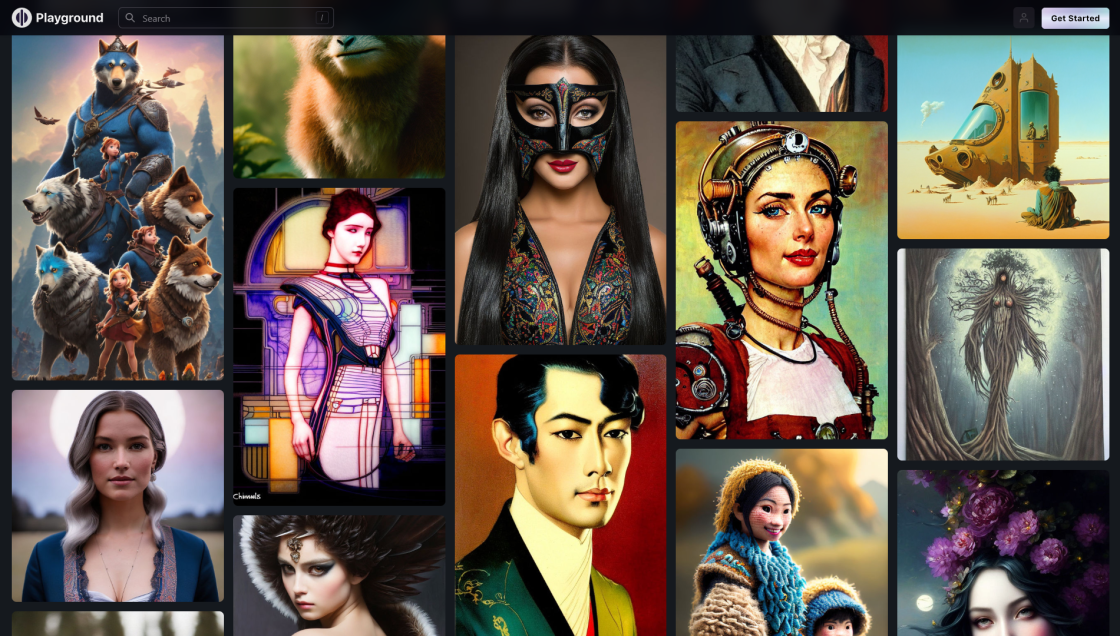

Playground AI

AI-Generated Music

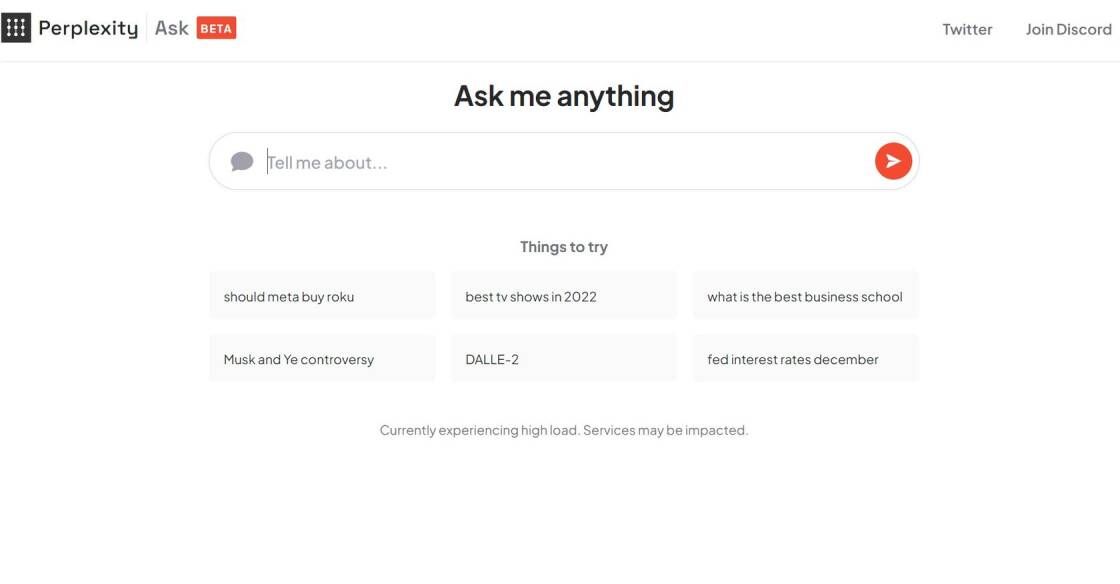

Perplexity AI

Building Smarter AI

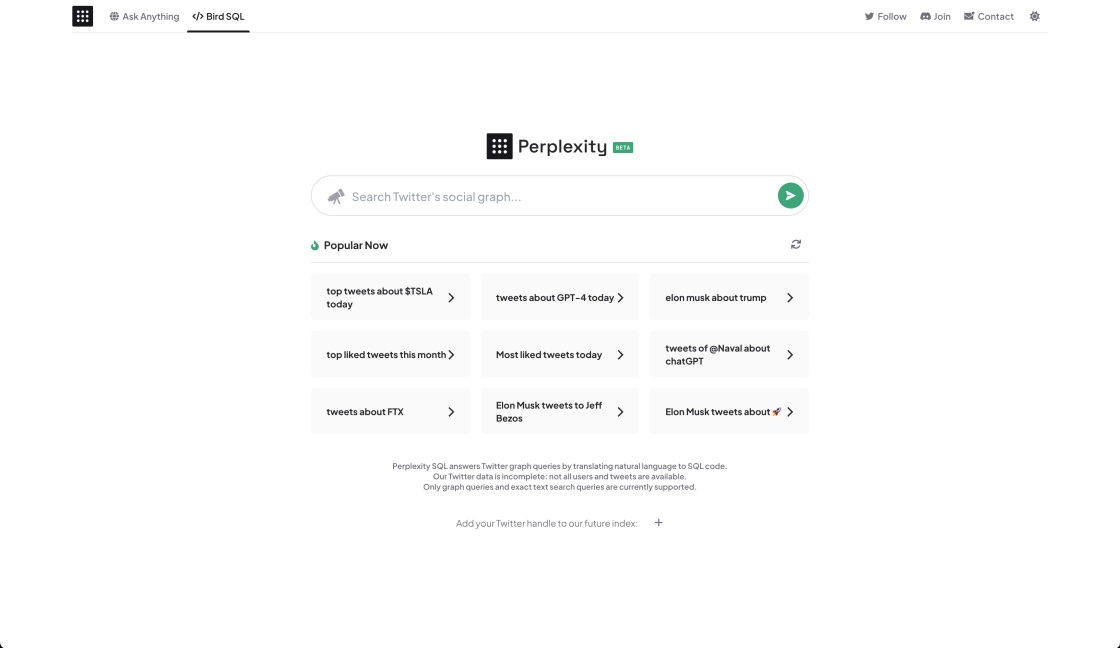

Perplexity AI: Bird SQL

A Twitter search interface that is powered by Perplexity’s structured search engine

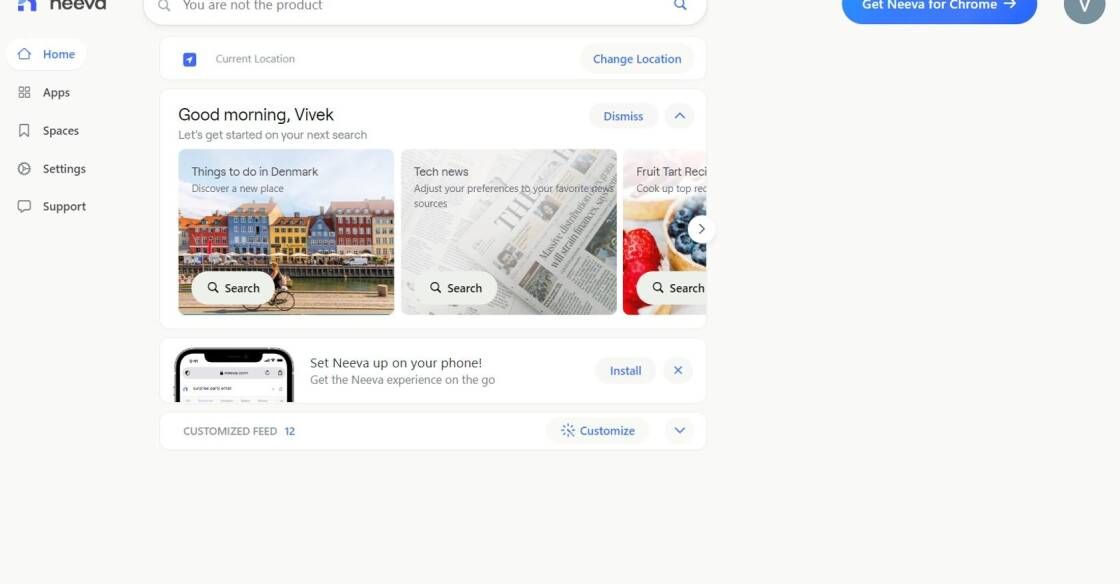

NeevaAI

The Future of Search

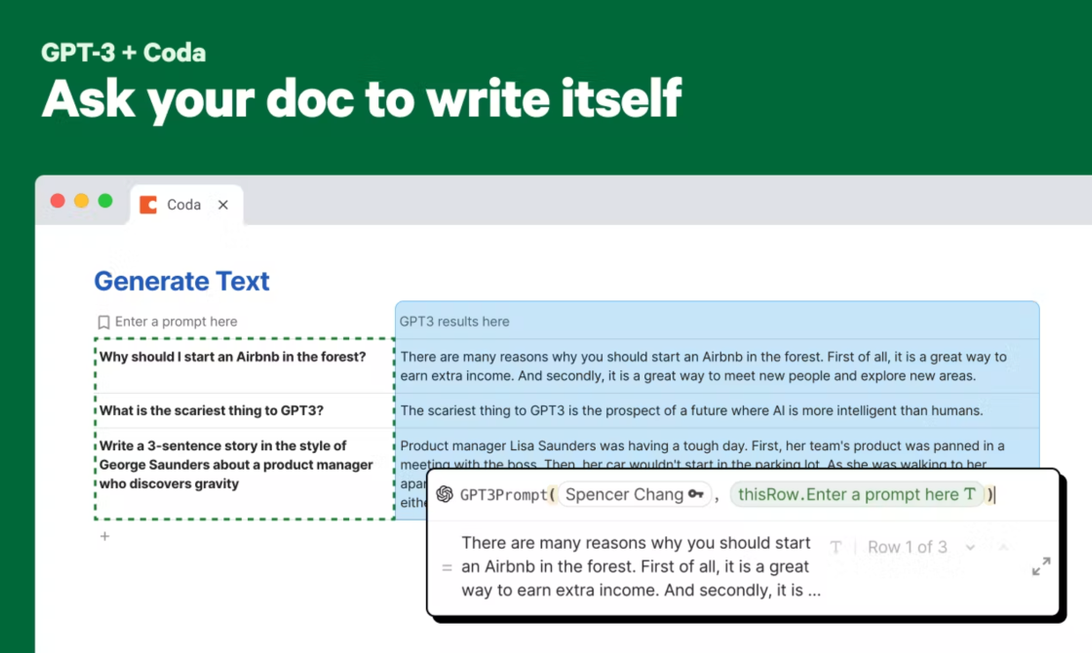

OpenAI For Coda

Automate hours of busywork in seconds with GPT-3 and DALL-E.

The Centre for AI Safety (CAIS) is a non-profit organization dedicated to AI safety. It aims to address the potential risks associated with artificial intelligence and to promote the safe development and deployment of advanced AI technologies. Through research, advocacy, and collaboration, CAIS works to foster a responsible and ethical approach to the growth of AI systems.

As the field of artificial intelligence continues to rapidly advance, the need for ensuring its safety becomes increasingly vital. CAIS acknowledges this urgency and serves as a reliable platform for various stakeholders, including researchers, policymakers, and industry leaders, to come together and prioritize the safety challenges associated with AI. By acting as a catalyst for thought-provoking discussions and generating innovative solutions, CAIS plays a pivotal role in shaping the future of AI safety.

CAIS's interdisciplinary team of experts is at the forefront of cutting-edge research, exploring diverse facets of AI safety. Their work encompasses understanding and mitigating risks related to machine learning, reinforcement learning algorithms, and autonomous systems. Through rigorous analysis and testing methodologies, CAIS strives to instill confidence in the public and build trust in the potential benefits that AI can bring while minimizing any potential harm.

Furthermore, CAIS actively engages in outreach programs, public awareness campaigns, and policy advocacy initiatives to ensure that the broader society remains informed about the importance of AI safety. By collaborating with governments, AI developers, and other stakeholders, CAIS aims to create a shared understanding of the potential risks and establish robust safety standards and protocols.

In summary, the Centre for AI Safety (CAIS) stands as a prominent non-profit organization dedicated to advancing AI safety. Through their comprehensive research, advocacy, and collaboration efforts, CAIS aims to address the critical challenges associated with AI and promote the responsible and safe development of advanced AI technologies.

The Centre for AI Safety (CAIS) is a non-profit organization dedicated to ensuring the safety and security of artificial intelligence (AI) systems.

CAIS's mission is to promote research and advocacy in the field of AI safety, with the goal of mitigating potential risks associated with advanced AI technologies.

AI safety is crucial because as AI systems become more autonomous and capable, it is essential to ensure they are designed and programmed in a way that prioritizes human safety and aligns with our values.

CAIS contributes to AI safety through conducting research, organizing conferences, and collaborating with experts and organizations in the field. They also strive to raise awareness about the importance of AI safety.

CAIS operates independently as a non-profit organization and is not directly affiliated with any specific company or institution. However, they collaborate with various stakeholders in the AI community.

Individuals can support CAIS by staying informed about AI safety issues, attending their events, and donating to help fund their research and advocacy initiatives.

CAIS's work benefits a wide range of stakeholders, including researchers, policymakers, AI developers, and the general public. Ensuring AI systems are safe and aligned with human values is in everyone's interest.

Some potential risks of advanced AI include unintended harmful behavior, loss of control over AI systems, unequal distribution of benefits, and reinforcement of existing biases. Addressing these risks is a priority for organizations like CAIS.

CAIS actively engages in ethical discussions surrounding AI development, advocating for the inclusion of ethical considerations in the design and deployment of AI systems. They work towards developing frameworks and guidelines to ensure responsible AI practices.

CAIS encourages individuals interested in AI safety to get involved through various means, such as joining their mailing list, participating in conferences or workshops, and potentially collaborating on research projects.

| Competitor Name | Difference |
|---|---|
| OpenAI | Focused on developing safe and beneficial AI through advanced research and cooperation with other institutions. Additionally, OpenAI aims to ensure the broad distribution of benefits from AI technology. |
| Future of Humanity Institute (FHI) | Conducts interdisciplinary research to assess and mitigate potential risks associated with future technologies, including AI. Collaborates with policymakers to provide guidance on long-term AI safety. |
| Machine Intelligence Research Institute (MIRI) | Primarily focuses on technical research to ensure the development of AI systems that align with human values. Also trains AI researchers and collaborates with other organizations to address safety concerns. |
| Center for Human-Compatible AI (CHAI) | Aims to develop AI systems that are transparent and aligned with humans' preferences. Their research explores ways to make AI beneficial, robust, and provably aligned with human values. |
| Future of Life Institute (FLI) | Engages in advocacy and grants programs to create a positive impact on the development of AI technology. They prioritize AI safety and collaborate with various entities to promote responsible AI practices. |
| AI Safety Support | Provides technical resources, risk assessment tools, and support for researchers and organizations working on AI safety. Offers practical solutions and assists with addressing safety concerns throughout AI development. |

The Centre for AI Safety (CAIS) is a renowned non-profit organization dedicated to AI safety. With a strong focus on ensuring the safe development and deployment of artificial intelligence, this center is at the forefront of research and advocacy in the field.

One crucial aspect of CAIS's work is addressing the potential risks associated with advanced AI systems. As AI continues to evolve rapidly, it is essential to anticipate and mitigate any potential negative impacts or unintended consequences that could arise. CAIS employs a multidisciplinary approach, bringing together experts from various fields to tackle these challenges head-on.

Collaboration lies at the heart of CAIS's mission. Recognizing that addressing AI safety requires a collective effort, CAIS actively collaborates with other research institutions, industry leaders, and policymakers worldwide. By fostering partnerships and knowledge-sharing, they aim to create a robust ecosystem that promotes responsible and safe AI development.

Education and outreach initiatives are also integral to CAIS's agenda. They strive to raise awareness about AI safety among both technical and non-technical audiences. Public engagement programs, workshops, and conferences organized by CAIS facilitate dialogue and knowledge exchange, ensuring that the broader community stays informed about the latest advancements and challenges in AI safety.

In addition to research and education, CAIS actively engages in policy advocacy to shape the legal and ethical frameworks surrounding AI. They work closely with policymakers to promote the adoption of regulations that prioritize safety, fairness, and transparency in AI development. By advocating for responsible AI practices, CAIS aims to ensure that AI technologies are developed and deployed for the benefit of humanity.

Transparency is a core value at CAIS. They share their research findings, methodologies, and best practices openly to facilitate collaboration and accelerate progress in the field. This approach enables others in the AI community to build upon their work and collectively advance AI safety research.

As an AI safety non-profit, CAIS plays a critical role in shaping the future of AI. By addressing the potential risks, fostering collaboration, educating the public, advocating for responsible policies, and promoting transparency, they strive to ensure that AI technologies are developed and deployed in a manner that prioritizes safety and aligns with human values.
