AI Web App Generator: Effortless AI Text Apps! Simplify content generation, language processing & writing. No coding needed. Create easily on our web platform. Unleash AI's power in text apps. Beta version - your feedback matters. Experience it now!

AI Food Robot uses Stable Diffusion models and LLMs to curate a list of dishes and recipes.

SDL, a leading provider of translation and localization technology solutions, has been helping businesses around the world to communicate more effectively with their global audiences. With its innovative software and professional services, SDL offers a comprehensive suite of solutions that enable organizations to manage complex multilingual content and automate the translation process. Whether you need to translate technical manuals, marketing materials, or software applications, SDL has the expertise and technology to deliver high-quality translations quickly and efficiently. In this article, we will explore the key features and benefits of SDL's translation and localization solutions and why they are essential for any business looking to expand its global reach.

Yandex Translate is an online translation service that provides a comprehensive and user-friendly platform for translating text, speech, images, websites, and documents in over 90 languages. Powered by Yandex AI, the service offers accurate translations, making it an essential tool for individuals and businesses worldwide. With its wide range of capabilities and free access, Yandex Translate has become a popular choice for anyone seeking to communicate across languages without any barriers.

Google GLaM (Generalist Language Model) is a large language model built to use computation efficiently. It is a sparsely activated mixture-of-experts model: of its roughly 1.2 trillion parameters, only about 97 billion are active for any given token, because each token is routed to just two experts in each mixture-of-experts layer. The GLaM paper reports better average zero-shot and one-shot performance than GPT-3 across 29 NLP tasks while needing about half the computation per token at inference. This makes it an efficient general-purpose model for machine learning and natural language processing tasks.
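GLaM's efficiency comes from sparse routing. The sketch below illustrates top-2 mixture-of-experts routing under simplifying assumptions: the experts are hypothetical one-line scalar functions and the gate logits are passed in directly, whereas GLaM uses learned feed-forward experts and a learned gating network. Renormalising the two selected gate weights is a common choice in top-2 gating and is assumed here, not taken from the source.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top2_moe(x, gate_logits, experts):
    """Send x to the two highest-scoring experts and mix their outputs.

    Only the two selected experts are evaluated, which is how a sparse model
    can hold many parameters while activating only a fraction per token.
    Returns (output, indices_of_selected_experts).
    """
    probs = softmax(gate_logits)
    # sorted() is stable even with reverse=True, so ties keep the lower index first.
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:2]
    weight_sum = sum(probs[i] for i in top)
    # Renormalise the two selected gate weights so they sum to 1 (assumed).
    output = sum(probs[i] / weight_sum * experts[i](x) for i in top)
    return output, top


# Four hypothetical experts that just scale their input.
experts = [lambda x, w=w: w * x for w in (1.0, 2.0, 3.0, 4.0)]
y, used = top2_moe(1.0, [0.0, 0.0, 5.0, 5.0], experts)
print(used, y)  # → [2, 3] 3.5
```

Experts 0 and 1 are never called in this example, which is the point of sparse activation: compute per token depends on the number of selected experts, not the total.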

GLM-130B is an open bilingual (English and Chinese) pre-trained language model with 130 billion parameters, developed at Tsinghua University. It is designed to bridge English and Chinese and can be used for machine translation, text classification, and other natural language processing (NLP) tasks. Because its model weights are openly released, developers can integrate it into their own projects.

GPT-3 × Figma Plugin

AI Powered Design

Namecheap Logo Maker

AI Powered Logo Creation

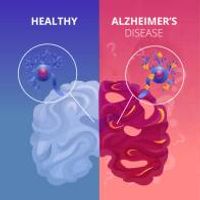

GPT-3 Alzheimer

Predicting dementia from spontaneous speech using large language models | PLOS Digital Health

PlaygroundAI

A free-to-use online AI image creator

Speechify

Best Free Text To Speech Voice Reader | Speechify

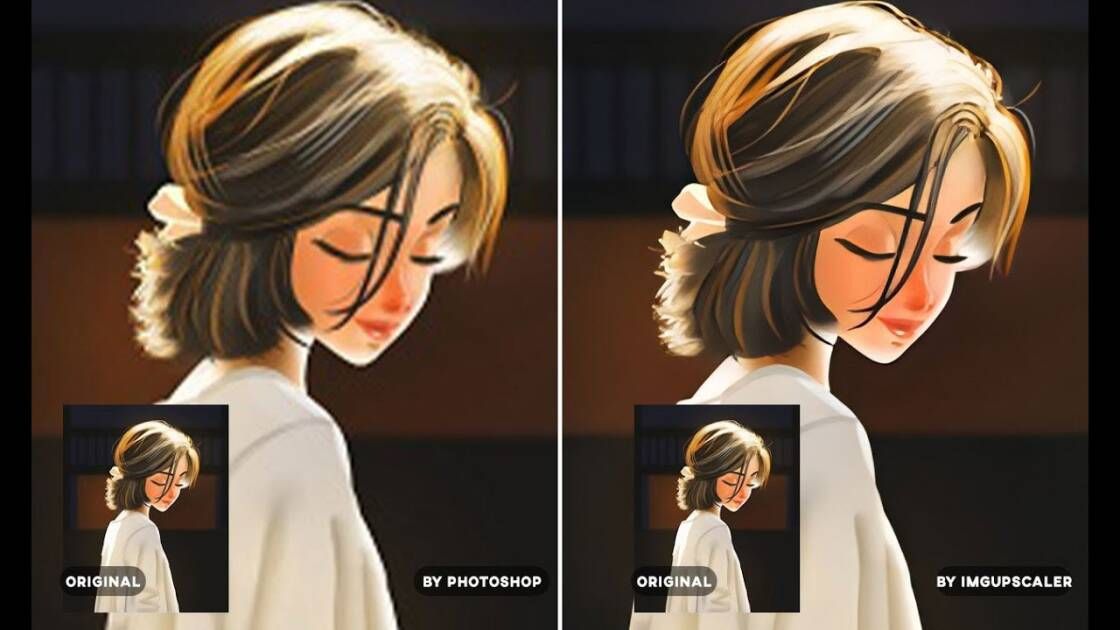

Img Upscaler

AI Image Upscaler: Upscale Photos and Cartoons in Batches for Free

Nijijourney

Nijijourney: AI for anime fans. The new niji model is tuned with a fine eye to produce anime and illustrative styles. It has far more knowledge of anime, anime styles, and anime aesthetics, and it excels at dynamic action shots and character-focused compositions in general.

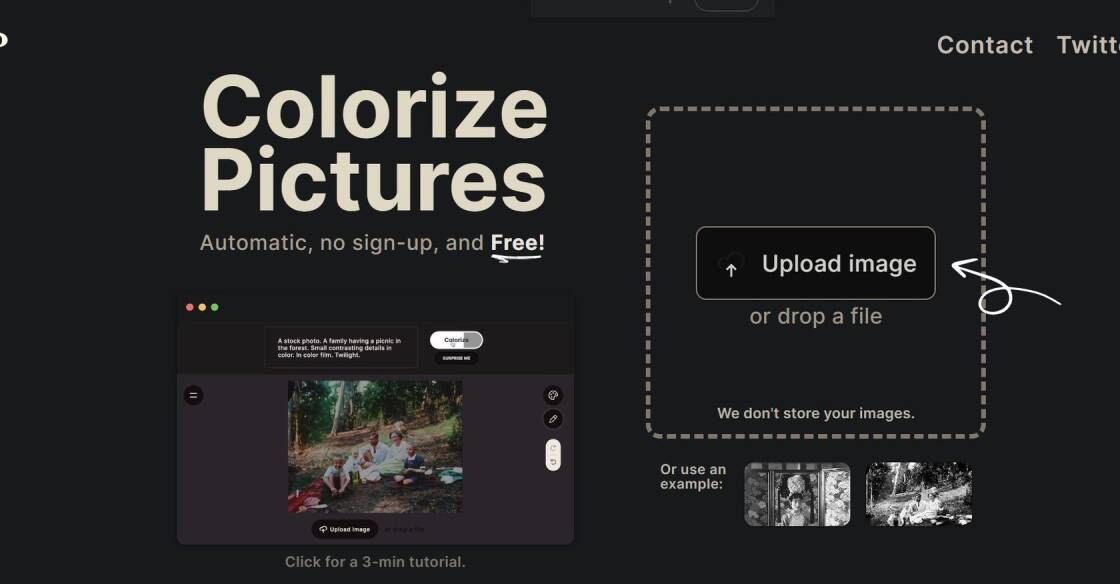

Palette.fm

AI-Powered Photo Colorization

Language models are a core part of artificial intelligence (AI) and natural language processing (NLP), and they have improved rapidly in recent years. One notable development is DeepMind's RETRO (Retrieval-Enhanced Transformer). Instead of storing everything it knows in its parameters, RETRO retrieves relevant passages from a text database of up to about 2 trillion tokens while it generates text. It is a Transformer trained with the usual next-token prediction objective, not a reinforcement learning system. Because the model can look information up rather than memorize it, a comparatively small RETRO model can match much larger conventional models, which makes retrieval a promising route to more accurate language models for a variety of tasks.

DeepMind RETRO is a research project developed by DeepMind to improve language models by retrieving data from trillions of tokens.

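DeepMind RETRO splits its input into chunks of 64 tokens, embeds each chunk with a frozen, pre-trained BERT model, and looks up the nearest neighbouring chunks in a database of up to 2 trillion tokens. The retrieved passages, each with the text that followed it, are fed into the model to help it predict the tokens that come next.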
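To make the retrieval step concrete, here is a toy sketch of chunking and nearest-neighbour lookup. It is not DeepMind's code: whitespace-separated words stand in for tokens, a hashed bag-of-words vector (the hypothetical `embed` function) stands in for the frozen BERT embedding, exact brute-force search stands in for the approximate nearest-neighbour index needed at trillion-token scale, and the chunk size is shrunk from 64 to 4. The names `chunk`, `build_database`, and `retrieve` are illustrative. Each database value is a neighbour chunk followed by its continuation, mirroring the description above.

```python
import math
import zlib

CHUNK_SIZE = 4  # RETRO uses 64-token chunks; shrunk here for readability
DIM = 32        # size of the toy embedding vector (hypothetical)


def chunk(tokens, size=CHUNK_SIZE):
    """Split a token list into consecutive chunks of `size` tokens."""
    return [tokens[i:i + size] for i in range(0, len(tokens), size)]


def embed(tokens, dim=DIM):
    """Hypothetical stand-in for frozen BERT: a hashed, L2-normalised bag of words."""
    vec = [0.0] * dim
    for tok in tokens:
        # crc32 is deterministic across runs, unlike Python's salted hash()
        vec[zlib.crc32(tok.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def build_database(corpus_tokens, size=CHUNK_SIZE):
    """Key each corpus chunk N by embed(N); store N plus its continuation F as the value."""
    chunks = chunk(corpus_tokens, size)
    database = []
    for i, neighbour in enumerate(chunks):
        continuation = chunks[i + 1] if i + 1 < len(chunks) else []
        database.append((embed(neighbour), neighbour + continuation))
    return database


def retrieve(query_chunk, database, k=2):
    """Return the values of the k entries nearest to the query in squared L2 distance."""
    q = embed(query_chunk)

    def distance(entry):
        key, _ = entry
        return sum((a - b) ** 2 for a, b in zip(q, key))

    # Exact search; sorted() is stable, so ties keep database order.
    return [value for _, value in sorted(database, key=distance)[:k]]


corpus = "the cat sat on the mat and the dog sat on the rug".split()
database = build_database(corpus)
print(retrieve(["the", "cat", "sat", "on"], database, k=1))
# → [['the', 'cat', 'sat', 'on', 'the', 'mat', 'and', 'the']]
```

Returning the continuation along with the matched chunk is what lets the model see not just a similar passage but how that passage went on, which is the useful signal for predicting what comes next.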

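DeepMind RETRO is a neural network (Transformer) language model. Its retrieval mechanism can also be added to an existing pre-trained Transformer, which the paper calls "RETROfitting", by training only the new retrieval-related layers while keeping the original weights frozen.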

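The purpose of DeepMind RETRO is to improve language models by letting them consult a large text database at prediction time instead of relying only on what is stored in their parameters. Its database is built from DeepMind's MassiveText corpus and contains up to about 2 trillion tokens.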

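Using DeepMind RETRO can make language models more parameter-efficient: the RETRO paper reports that a 7.5-billion-parameter model with retrieval performs comparably to GPT-3 and Jurassic-1 on the Pile while using 25× fewer parameters.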

DeepMind published RETRO in December 2021.

DeepMind RETRO works with neural Transformer language models, including existing pre-trained Transformers that are retrofitted with its retrieval layers; it does not target traditional statistical language models.

No, DeepMind RETRO is not open source.

DeepMind RETRO retrieves from a fixed, pre-built database of text chunks (up to about 2 trillion tokens), not from the live web.

No, there is no cost: DeepMind RETRO is a published research model rather than a paid product.

| Competitor | Difference from DeepMind RETRO |
|---|---|
| OpenAI GPT-3 | GPT-3 is a 175-billion-parameter Transformer that stores its knowledge entirely in its weights and does not retrieve from an external database. The RETRO paper reports that a 7.5-billion-parameter RETRO performs comparably to GPT-3 on the Pile. |
| Google BERT | BERT is a bidirectional Transformer encoder that takes into account context from both the left and right sides of a sentence; it is mainly used for language understanding rather than text generation. RETRO itself uses a frozen, pre-trained BERT to embed text chunks for retrieval. |
| Microsoft Transformer | Microsoft Transformer is a language model that uses a Transformer architecture similar to Google BERT's, with fewer parameters. |
| Facebook Transformer | Facebook Transformer is trained differently from DeepMind RETRO, with the emphasis on large-scale pre-training for a wide range of NLP tasks. |
| AllenNLP ELMo | ELMo produces deep contextualized word representations from bidirectional LSTM networks, so each word's representation depends on its context in the sentence. Unlike RETRO, it is not a Transformer and does not use retrieval. |

DeepMind’s RETRO (Retrieval-Enhanced Transformer) is a language model that pairs a Transformer with the ability to retrieve text from a database of trillions of tokens. Its aim is to improve on conventional language models, which must store everything they know in their parameters. By conditioning on retrieved passages, RETRO reaches accuracy comparable to conventional models with many times more parameters.

DeepMind’s RETRO builds on the idea of retrieval-augmented language modelling. As it processes text, the model looks up passages similar to each chunk it has already seen, encodes them, and attends to them through chunked cross-attention layers while predicting the following tokens. It is trained with the standard self-supervised objective of predicting the next token; no reinforcement learning is involved. The paper reports competitive results on question answering after fine-tuning, and the same approach can in principle support other text tasks such as summarization and machine translation.
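The retrieval-enhanced objective can be written out explicitly. Following the RETRO paper's formulation as we understand it, split a sequence $X$ into $l$ chunks $C_1, \dots, C_l$ of $m$ tokens each ($m = 64$), let $\mathrm{Ret}_{\mathcal{D}}(C)$ denote the neighbours retrieved from database $\mathcal{D}$ for chunk $C$, and let $\ell_\theta$ be the model's log-likelihood of a token given its context:

$$
L(X \mid \theta, \mathcal{D}) = \sum_{u=1}^{l} \sum_{i=1}^{m} \ell_\theta\!\left(x_{(u-1)m+i} \,\middle|\, (x_j)_{j < (u-1)m+i},\; \big(\mathrm{Ret}_{\mathcal{D}}(C_{u'})\big)_{u' < u}\right)
$$

Tokens in chunk $C_u$ condition only on earlier tokens and on neighbours retrieved for earlier chunks ($u' < u$), so retrieval never exposes the text being predicted, and the first chunk is predicted with no retrieved neighbours at all.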

In addition, RETRO’s “memory” is its external retrieval database rather than an internal memory architecture. Each entry is keyed by the frozen BERT embedding of a 64-token chunk, and its value is that chunk together with the text that follows it, so the model sees both a similar passage and how it continued. Because this knowledge lives in the database rather than in the network’s weights, the stored text can in principle be changed without retraining the model.

Finally, DeepMind’s RETRO scales well: the paper reports that the benefit of retrieval holds across the model sizes studied (up to 7.5 billion parameters) and grows as the retrieval database is enlarged toward 2 trillion tokens. This makes the approach attractive for large-scale tasks such as question answering, text summarization and machine translation.

Overall, DeepMind’s RETRO shows that retrieval from a trillion-token database lets a comparatively small Transformer rival much larger language models, and that the benefit grows with the size of the database. For these reasons, RETRO is a valuable reference for any developer looking to build accurate and efficient language models.
