Microsoft Kosmos-1 is a multimodal large language model developed by Microsoft. It takes images and text as input and generates text in response. The model is designed for language tasks such as text summarization and question answering, as well as tasks that combine vision and language, such as describing or answering questions about an image. Microsoft Kosmos-1 has the potential to significantly improve human-machine interactions. In this article, we will explore the features and capabilities of this language model.

The field of natural language processing is constantly evolving, with breakthroughs such as GPT-4 from OpenAI setting new precedents. GPT-4 is the latest and greatest large multimodal model from OpenAI that has been making waves in the AI community. With its state-of-the-art architecture, GPT-4 is capable of performing a variety of tasks including language translation, question-answering, text generation, and much more. This highly anticipated model promises to be a game-changer in the world of natural language processing, providing researchers and developers with powerful tools to push the boundaries of what's possible.

Yandex Translate is an online translation service that provides a comprehensive and user-friendly platform for translating text, speech, images, websites, and documents in over 90 languages. Powered by Yandex AI, the service offers accurate translations, making it an essential tool for individuals and businesses worldwide. With its wide range of capabilities and free access, Yandex Translate has become a popular choice for anyone seeking to communicate across languages without any barriers.

Turing-NLG is an innovative Natural Language Generation (NLG) system developed by Microsoft as part of the Project Turing initiative. It enables machines to generate human-like natural language from structured data. This technology has the potential to revolutionize the way humans interact with machines and how machines process information. With its ability to quickly generate natural language output, it can provide users with more accurate and meaningful interpretations of data. This technology could be used in a variety of applications such as personal assistants, search engines, and automated customer service agents.

Alien Genesys

AI Powered DNA Analysis

GPT-3 Road Trip Plans For 2021 By CarMax

AI Plans a Road Trip | CarMax

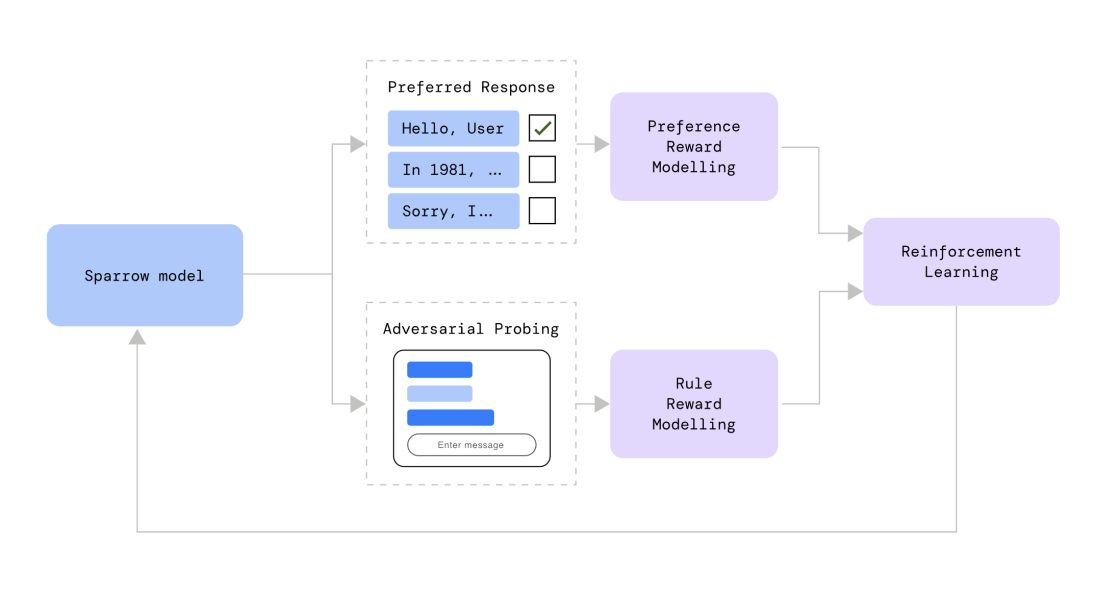

Deepmind Sparrow AI

[2209.14375] Improving alignment of dialogue agents via targeted human judgements

Google GShard

[2006.16668] GShard: Scaling Giant Models with Conditional Computation and Automatic Sharding

Lexica

The Stable Diffusion search engine.

Remini

Remini - AI Photo Enhancer

Riffusion

Riffusion generates music from text prompts. Try your favorite styles, instruments like saxophone or violin, modifiers like arabic or jamaican, genres like jazz or gospel, sounds like church bells or rain, or any combination

Palette.fm

AI Generated Music for Your Projects

DistilBERT is a compact natural language processing (NLP) model introduced by Hugging Face in 2019. It is a distilled version of BERT, the popular NLP model developed by Google in 2018, and is smaller, faster, cheaper and lighter than the original. According to its authors, it is about 40% smaller and 60% faster than BERT while retaining roughly 97% of its language-understanding performance, so it trades a small amount of accuracy for efficiency on tasks such as question answering, sentiment analysis and natural language inference. Like BERT, DistilBERT is pre-trained on a large text corpus and can then be fine-tuned on comparatively small task-specific datasets; because it has fewer parameters, fine-tuning and inference require fewer resources. This makes it well suited to applications where speed and efficiency are important. In addition, DistilBERT can be used as a feature extractor in transfer learning scenarios, as its representations capture subtle linguistic patterns in the data. This balance of accuracy and efficiency has made DistilBERT a common choice for many NLP tasks.

DistilBERT is a distilled version of BERT, which is smaller, faster, cheaper and lighter.

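DistilBERT achieves its smaller size through knowledge distillation, a model compression technique in which a smaller "student" model is trained to reproduce the behavior of a larger "teacher" model (here, BERT). The resulting model has half as many Transformer layers as BERT-base.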

DistilBERT is reported by its authors to be about 60% faster than BERT at inference. The actual speedup depends on the task, the hardware and the input length.

DistilBERT is usually cheaper to run than BERT, since it requires less compute and memory.

DistilBERT is lighter than BERT, since it has fewer parameters.

DistilBERT can be used for the same tasks as BERT, but it may not perform quite as well.

Which model is the better choice depends on the task. DistilBERT typically scores slightly below BERT on accuracy, so BERT may be more suitable when accuracy matters most, while DistilBERT is the better fit when speed or cost is the main constraint.

DistilBERT is generally easier to deploy than BERT, since it requires fewer resources and is faster.

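| Competitor | Difference from DistilBERT |
|---|---|
| BERT | Larger, slower, more expensive and heavier |
| RoBERTa | Larger and slower; keeps BERT's architecture but uses a more extensive pre-training recipe |
| ALBERT | Fewer parameters in its base configuration thanks to cross-layer parameter sharing, but not correspondingly faster at inference |
| XLNet | Larger and slower; uses permutation-based autoregressive pre-training |
| XLM | Larger and slower; designed for cross-lingual tasks |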

DistilBERT is a distilled version of the popular BERT (Bidirectional Encoder Representations from Transformers) language model. It offers a smaller, faster, cheaper, and lighter model than the original BERT. DistilBERT has fewer parameters than the original BERT model, thus making it easier to train and faster to execute. It also requires significantly less memory, making it more cost-effective and ideal for applications with limited resources. In addition, DistilBERT can be fine-tuned on a wide range of tasks such as question answering, natural language inference, sentiment analysis, and text classification.
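To make the "fewer parameters" claim concrete, here is a rough back-of-the-envelope sketch that counts the weights of a BERT-style encoder. The configuration values (vocabulary of 30,522 tokens, hidden size 768, 512 positions, feed-forward size 3,072, 12 layers for BERT-base versus 6 for DistilBERT) and the assumption that DistilBERT drops the token-type embeddings and the pooler are taken from the commonly published base-uncased configurations, not from this page. The function name is hypothetical, and task-specific heads are ignored.

```python
def encoder_param_count(vocab=30522, hidden=768, max_pos=512, layers=12,
                        ffn=3072, token_types=2, pooler=True):
    """Approximate parameter count of a BERT-style encoder (no task head)."""
    layer_norm = 2 * hidden  # scale + bias
    # Word, position and (optional) token-type embeddings, plus one LayerNorm.
    embeddings = (vocab + max_pos + token_types) * hidden + layer_norm
    # Query, key, value and output projections, each with a bias.
    attention = 4 * (hidden * hidden + hidden)
    # Two feed-forward projections (hidden -> ffn -> hidden), each with a bias.
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden)
    per_layer = attention + layer_norm + feed_forward + layer_norm
    pooler_params = hidden * hidden + hidden if pooler else 0
    return embeddings + layers * per_layer + pooler_params


# Assumed configurations: BERT-base vs. a 6-layer student without
# token-type embeddings or pooler.
bert_params = encoder_param_count()
distil_params = encoder_param_count(layers=6, token_types=0, pooler=False)
reduction = 1 - distil_params / bert_params

print(f"BERT-base:  {bert_params:,}")
print(f"DistilBERT: {distil_params:,}")
print(f"Reduction:  {reduction:.1%}")
```

Under these assumptions the estimate comes out at roughly 110 million parameters for BERT-base and 66 million for DistilBERT. Most of the saving comes from halving the number of layers, while the large embedding table is unchanged.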

The DistilBERT model follows the same general architecture as BERT, but with half the number of Transformer layers and without BERT's token-type embeddings and pooler. To create DistilBERT, researchers at Hugging Face used knowledge distillation, which compresses a larger, more powerful model (BERT) into a smaller one (DistilBERT) by training the smaller model to reproduce the larger model's output distributions. This technique allows the model to retain most of the performance of the larger model while achieving a much smaller size and faster inference time.
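The core idea of knowledge distillation can be sketched in a few lines of plain Python. This is a minimal illustration, not the actual DistilBERT training code: the function names, the temperature of 2.0 and the example logits are all hypothetical, and the real training objective combines a term like this with further loss terms. The student is penalized by the KL divergence between its temperature-softened output distribution and the teacher's.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax; higher temperature gives a flatter distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions.

    The temperature**2 factor is a common convention that keeps gradient
    magnitudes comparable across temperatures (an assumption here).
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(t * math.log(t / s) for t, s in zip(p_teacher, p_student) if t > 0)
    return kl * temperature ** 2


# Hypothetical logits over three classes.
teacher = [4.0, 1.0, 0.5]
good_student = [3.8, 1.1, 0.4]   # close to the teacher
poor_student = [0.5, 4.0, 1.0]   # disagrees with the teacher

print(distillation_loss(teacher, good_student))
print(distillation_loss(teacher, poor_student))
```

A student whose logits track the teacher's receives a loss near zero, while one that disagrees is penalized more heavily. Softening with a temperature above 1 exposes the teacher's relative preferences among the non-top classes, which is the extra signal a student does not get from hard labels alone.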

DistilBERT is already proving to be a powerful tool in natural language processing (NLP). It is being used in various research projects, and some companies are already using it to improve their models and services. For example, Microsoft has used DistilBERT to improve the performance of its question answering system.

In short, DistilBERT is an excellent option for those who need a smaller, faster, cheaper, and lighter model than BERT. It is ideal for applications that require a fast and efficient model, such as question answering systems or text classification tasks.
