AI that suggests personalized gift ideas for anyone, for any occasion, for free! The best part? Cele is not a one-off. Create a profile, add members to Your Fam, and the more you use Cele, the better the AI gets for the next holiday!

Intento is a platform that helps customers connect their applications to a wide range of Artificial Intelligence (AI) services. Instead of integrating each AI provider separately, businesses can access a variety of AI services through Intento and add them to their applications with less coding and technical expertise. This simplifies adopting AI capabilities and makes it easier for businesses to improve their operations.

The OpenAI GPT-3 API is a commercial, cloud-hosted application programming interface (API) that gives users access to pre-trained natural language models developed by OpenAI. Rather than downloading the models, developers send text prompts to the API and receive generated text in return. The API offers several models of different sizes and costs, with applications in fields such as chatbots, content creation, and translation. It is designed to help developers build AI-based solutions on top of state-of-the-art natural language processing without training their own models, which can help businesses and individuals streamline their workflows.

NanoGPT is a minimal, open-source code repository by Andrej Karpathy that simplifies training and finetuning medium-sized GPTs (Generative Pre-trained Transformers). Its code is deliberately short and readable, which makes it a simple and fast way to train language models, from reproducing GPT-2 (124M) to finetuning pretrained GPT-2 checkpoints on your own text. It is a valuable resource for both experienced developers and those just starting out with GPTs, and for anyone interested in building a medium-sized GPT model.

GPT-2 is a large language model created by OpenAI, an artificial intelligence research laboratory. It is a generative pre-trained transformer: it was trained on WebText, a dataset of text from roughly 8 million web pages, to predict the next word, which lets it generate text that mimics human language. GPT-2 can be applied to tasks ranging from creative writing to automated summarization of long documents, and OpenAI's public release of the model weights made it widely available to researchers and developers.

DialoGPT is a machine learning model from Microsoft for large-scale pretraining for dialogue. It builds on the GPT-2 architecture and was trained on about 147 million conversation-like exchanges extracted from Reddit comment threads. This pretraining helps it take the context of a conversation into account and generate relevant, natural-sounding responses, which makes it a strong starting point for building dialogue systems.

AI Roguelite

AI Roguelite on Steam

CharacterAI

Personality Insights and Predictive Analytics

Notes For ChatGPT

Notebook Web Clipper

MarioGPT

AI-generated Super Mario Levels

Perplexity AI: Bird SQL

A Twitter search interface that is powered by Perplexity’s structured search engine

AI Image Enlarger

AI Image Enlarger | Enlarge Image Without Losing Quality!

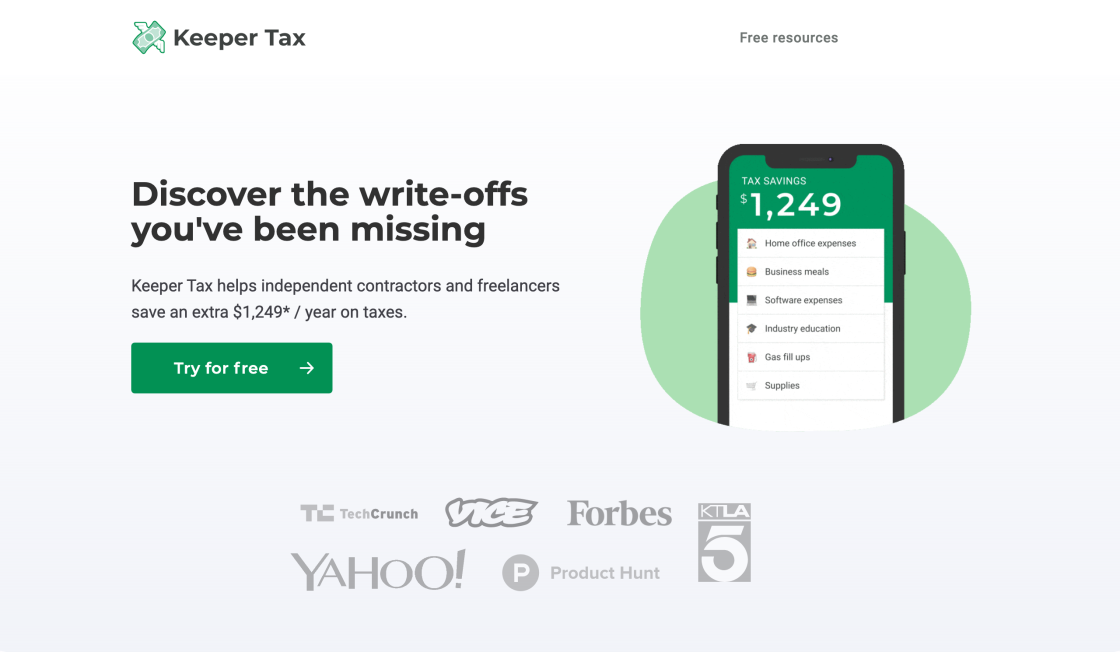

Keeper Tax

Keeper - Taxes made magical

Flowrite

Flowrite - Supercharge your daily communication

Google GShard is a system designed to enable efficient, large-scale training of deep learning models. It combines conditional computation with automatic sharding: a set of lightweight annotation APIs and an extension to the XLA compiler let developers express how a model should be partitioned across many accelerators with minimal changes to the model code. In the original work, Google used GShard to scale a multilingual neural machine translation Transformer with Sparsely-Gated Mixture-of-Experts layers beyond 600 billion parameters, trained it on 2048 TPU v3 accelerators in 4 days, and reported far better translation quality than prior models. Because conditional computation activates only part of the model for each input, the parameter count can grow much faster than the compute needed per example, making models practical to train that were previously out of reach due to computational resource limitations. Google GShard is an important development in machine learning and is likely to shape future research and applications involving very large models.

Google GShard is a system designed for scaling giant models with conditional computation and automatic sharding.

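Because conditional computation runs only a few parts of the model for each input, Google GShard lets the parameter count grow much faster than the compute spent per example. This lowers the cost of training and serving very large models and makes models with far more parameters practical to train.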

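Google GShard works by splitting a model's computation across many accelerators. Developers annotate how a few critical tensors should be partitioned, and the XLA compiler propagates those annotations to the rest of the computation and generates a single program that every device runs on its own shard of the weights and data. The shards are then processed in parallel, which improves scalability and performance.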
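The core idea behind tensor sharding can be shown without any accelerator. The minimal sketch below, in plain Python, splits a weight matrix by columns across simulated devices, lets each "device" compute its slice of the output, and concatenates the slices. The helper names (`matmul`, `shard_columns`, `sharded_matmul`) are hypothetical; in GShard the compiler derives the per-device program from an annotation instead of hand-written code like this.

```python
def matmul(x, w):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as nested lists."""
    k, m = len(w), len(w[0])
    return [[sum(row[t] * w[t][j] for t in range(k)) for j in range(m)] for row in x]


def shard_columns(w, num_devices):
    """Split the columns of w into num_devices contiguous shards of equal width."""
    m = len(w[0])
    assert m % num_devices == 0, "columns must divide evenly across devices"
    width = m // num_devices
    return [[row[d * width:(d + 1) * width] for row in w] for d in range(num_devices)]


def sharded_matmul(x, w, num_devices):
    """Each simulated device multiplies x by its own column shard of w."""
    partials = [matmul(x, shard) for shard in shard_columns(w, num_devices)]
    # Concatenate the per-device outputs along the column axis (like an all-gather).
    return [sum((p[i] for p in partials), []) for i in range(len(x))]


x = [[1, 2], [3, 4]]
w = [[1, 0, 2, 1], [0, 1, 1, 3]]
# The sharded result matches the unsharded product.
assert sharded_matmul(x, w, num_devices=2) == matmul(x, w)
```

Splitting by columns means no device needs the whole weight matrix, and the only communication is gathering the output slices at the end; other split choices trade memory for different communication patterns.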

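Google GShard targets neural network models whose computation is compiled by XLA; the original work used TensorFlow through Google's Lingvo framework and demonstrated GShard on Transformer models for machine translation.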

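Google GShard is not a standalone managed product. Its partitioning support is part of the open-source XLA compiler, the reference Mixture-of-Experts model code is available in the open-source Lingvo framework, and it can be run on Cloud TPUs in Google Cloud Platform.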

Google GShard is designed to scale with model size and can handle very large-scale projects.

Google GShard supports distributed machine learning and spreads both training and inference across many devices.

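The original GShard work ran on TPU v3 accelerators; the XLA compiler it builds on also targets GPUs.

Google GShard itself is not sold as a priced service; the cost of using it comes from the accelerator time (for example, Cloud TPUs) that your jobs consume.

Jobs that use Google GShard can be tracked with the same tools as other TensorFlow jobs, such as TensorBoard, or with Google Cloud Platform monitoring tools when running on Cloud TPUs.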

| Competitor | Difference |
|---|---|
| Microsoft Azure Machine Learning | Azure Machine Learning is a managed platform for building, training, and deploying models, whereas Google GShard is an open-source, compiler-level approach to scaling a single giant model with conditional computation and automatic sharding. |
| Amazon SageMaker | Amazon SageMaker is a managed ML platform whose model-parallel library partitions models across devices; Google GShard instead relies on sharding annotations that the XLA compiler propagates automatically. |
| IBM Watson Machine Learning | IBM Watson Machine Learning does not provide a feature for automatic sharding, which is a key feature of Google GShard. |
| TensorFlow Extended | TensorFlow Extended (TFX) is a platform for building production ML pipelines and does not provide built-in automatic model sharding like Google GShard. |

Google GShard is a tool for scaling giant models with conditional computation and automatic sharding. It is designed to help developers manage large-scale machine learning (ML) projects, especially those involving deep learning and natural language processing (NLP). With GShard, developers can partition a model across many accelerators to distribute the workload, reduce training times, and train models that would not fit on a single device.

GShard supports conditional computation through sparsely-gated Mixture-of-Experts (MoE) layers. A small gating network routes each input token to only a few "expert" sub-networks (two, in the GShard paper), so just a fraction of the model's parameters is used for any given token. This lets developers grow the total number of parameters, and with it model quality, without a proportional increase in the compute spent per token.
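To make the routing idea concrete, here is a minimal pure-Python sketch of top-2 gating, the routing scheme used in the GShard paper. It is a simplification under stated assumptions: the toy experts and gate logits are hypothetical, and it omits the expert capacity limit, the random routing of the second expert, and the auxiliary load-balancing loss that the paper adds.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top2_gate(logits):
    """Pick the two highest-probability experts and renormalize their gate weights."""
    probs = softmax(logits)
    ranked = sorted(range(len(probs)), key=lambda e: probs[e], reverse=True)
    first, second = ranked[0], ranked[1]
    norm = probs[first] + probs[second]
    return [(first, probs[first] / norm), (second, probs[second] / norm)]


def moe_layer(token, experts, gate_logits):
    """Run only the two selected experts on the token and mix their outputs."""
    output = [0.0] * len(token)
    for expert_id, weight in top2_gate(gate_logits):
        expert_out = experts[expert_id](token)  # unselected experts never run
        output = [o + weight * v for o, v in zip(output, expert_out)]
    return output


# Four hypothetical toy experts: each just scales its input by a different factor.
experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate_logits = [0.1, 2.0, 0.3, 1.5]  # hypothetical gating-network scores for one token

routing = top2_gate(gate_logits)  # experts 1 and 3 are selected
mixed = moe_layer([1.0, 1.0], experts, gate_logits)
```

Adding more experts increases the parameter count, but each token still runs through exactly two of them, which is why per-token compute stays roughly constant.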

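GShard also supports automatic sharding, which helps to improve the scalability of ML models. Sharding splits a model's tensors (weights and intermediate activations) along one or more dimensions and places the pieces on different devices, so a model too large for one accelerator can still be trained and served. In GShard, developers annotate how a few key tensors should be split, and the compiler works out the partitioning for the rest of the computation. This makes it practical to scale a model up, for example by adding more experts, without hand-writing the parallel code.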
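In the MoE Transformer described in the GShard paper, the expert layers are the part that gets split across devices, while the remaining layers are replicated. The minimal sketch below shows only the bookkeeping for that placement: experts are assigned to devices in contiguous blocks, and routed tokens are grouped by the device that owns their expert, mimicking the all-to-all exchange. It assumes evenly divisible counts and hypothetical routing decisions; `place_experts` and `dispatch` are illustrative names, not GShard APIs.

```python
from collections import defaultdict


def place_experts(num_experts, num_devices):
    """Assign experts to devices in contiguous blocks of num_experts / num_devices."""
    assert num_experts % num_devices == 0, "experts must divide evenly across devices"
    per_device = num_experts // num_devices
    return {e: e // per_device for e in range(num_experts)}


def dispatch(assignments, placement):
    """Group (token_id, expert_id) pairs by the device that holds the expert.

    Each token is sent to whichever device owns the expert it was routed to,
    which is the role the all-to-all communication step plays.
    """
    outbox = defaultdict(list)
    for token_id, expert_id in assignments:
        outbox[placement[expert_id]].append((token_id, expert_id))
    return dict(outbox)


placement = place_experts(num_experts=8, num_devices=4)  # two experts per device
# Hypothetical routing decisions for five tokens: (token_id, expert_id).
routes = [(0, 1), (1, 6), (2, 0), (3, 7), (4, 3)]
by_device = dispatch(routes, placement)
```

Here device 2 receives no tokens, which illustrates why the GShard paper adds a load-balancing loss and a per-expert capacity limit: uneven routing leaves some devices idle while others are overloaded.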

Overall, Google GShard is a valuable tool for developers working with large-scale ML projects. Conditional computation keeps the compute per example low as models grow, and automatic sharding removes much of the manual work of partitioning a model across devices. GShard is most useful when a model is too large to train efficiently on a single accelerator.
