Access Natural Language Processing (NLP) tools through a REST API. Sign-up is free and gives you access to categorization, topic tagging, named entity recognition, disambiguation tools, and more.

AI Curious aims to be the world's most valuable newsletter about artificial intelligence. Every weekday you get three findings, two tools, and one more thing about AI. Stay AI-curious!

Localazy is a platform for automated app localization. It lets developers translate their apps into more than 100 languages, giving users around the world a more personalized experience. By streamlining the localization process, it lets developers focus on building the app rather than on language barriers. Its interface and feature set suit businesses of all sizes that want to expand into international markets.

LingQ is a language-learning platform that offers courses, textbooks, and tutors for people learning a wide range of languages. Learners can tailor their study to their own goals and preferences and draw on a large library of podcasts, articles, and videos in multiple languages, which makes it useful for both beginners and advanced learners. LingQ's community also lets learners connect with other language enthusiasts around the world.

Med-PaLM is a large language model from Google adapted to the medical domain. It is designed to handle medical terminology and concepts, for example when answering medical questions or analyzing medical documents. It could change how healthcare professionals work with medical data and how they communicate with patients, and it may become a valuable tool for the medical industry.

Gopher, from DeepMind, is a language model with 280 billion parameters, one of the largest language models at the time of its release in 2021. It was designed to understand and process natural language more accurately than earlier models, with the aim of interpreting complex concepts, producing sophisticated language, and solving difficult problems more effectively. Gopher is a significant step in the development of large language models.

Remove.bg

Remove Background from Image for Free – remove.bg

Voicemod

Free Real Time Voice Changer & Modulator - Voicemod

DALL·E By OpenAI

GPT-3 Model for Image Generation

InVideo

AI-Powered Video Creation

Speechify

Best Free Text To Speech Voice Reader | Speechify

AI Image Enlarger

AI Image Enlarger | Enlarge Image Without Losing Quality!

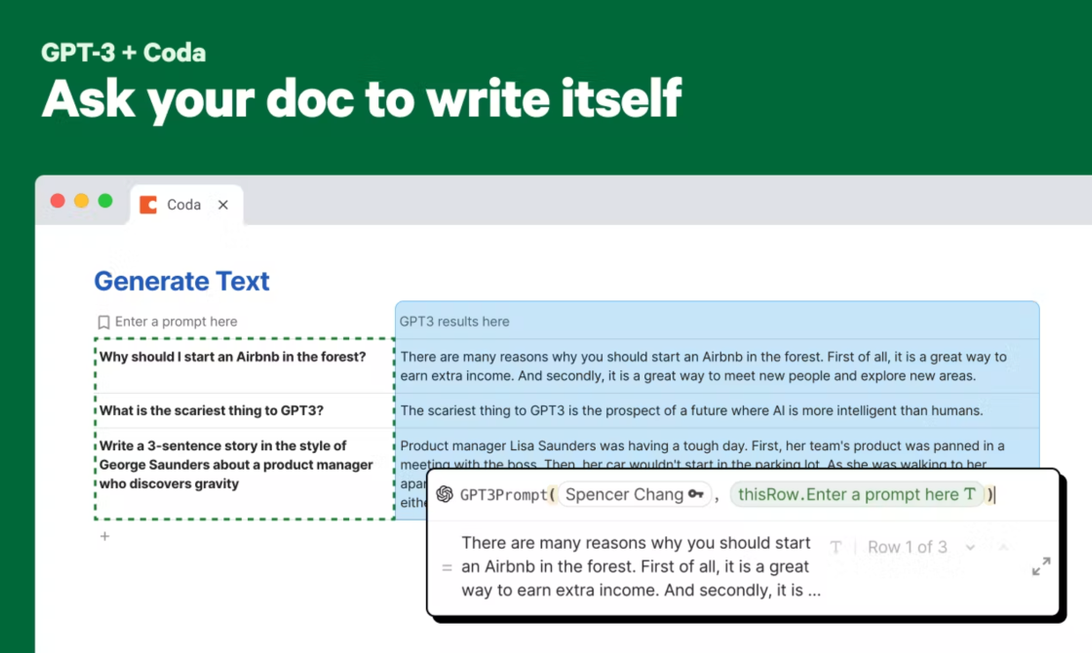

OpenAI For Coda

Automate hours of busywork in seconds with GPT-3 and DALL-E.

LALAL.AI

LALAL.AI: 100% AI-Powered Vocal and Instrumental Tracks Remover

GPT-2 (Generative Pre-trained Transformer 2) by OpenAI is a language model that had a major influence on natural language processing. It is a transformer-based neural network trained to predict the next token (roughly, the next word or word fragment) given all of the preceding text. Repeating that prediction lets it generate coherent paragraphs from just a few words of input. GPT-2 was trained on a large corpus of web text and has been applied to tasks such as question answering, summarization, and machine translation. Because it can answer questions, continue stories, and even draft full essays, it has the potential to change the way we interact with machines.
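The generation process described above is autoregressive: predict one token, append it, and predict again. The sketch below shows only that loop. As a loud assumption, a hypothetical hand-written bigram table (`NEXT_TOKEN_PROBS`) stands in for the model. GPT-2 itself computes the next-token distribution with a transformer over the entire prefix, not just the last token.

```python
# Hypothetical stand-in for a language model: next-token probabilities that
# depend only on the last token (a bigram model). GPT-2 instead conditions on
# the whole prefix using a transformer.
NEXT_TOKEN_PROBS = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "dog": {"ran": 0.8, "sat": 0.2},
    "sat": {"down": 0.9, "<eos>": 0.1},
    "ran": {"away": 0.9, "<eos>": 0.1},
    "down": {"<eos>": 1.0},
    "away": {"<eos>": 1.0},
}


def generate_greedy(prompt: list[str], max_new_tokens: int = 10) -> list[str]:
    """Autoregressive decoding: repeatedly append the most likely next token
    until an end-of-sequence marker appears or the token budget runs out."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        # Unknown tokens fall back to ending the sequence.
        dist = NEXT_TOKEN_PROBS.get(tokens[-1], {"<eos>": 1.0})
        next_token = max(dist, key=dist.get)  # greedy: highest probability
        if next_token == "<eos>":
            break
        tokens.append(next_token)
    return tokens


print(" ".join(generate_greedy(["the"])))  # prints "the cat sat down"
```

Greedy decoding is the simplest choice; sampling strategies trade some predictability for more varied text.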

GPT-2 is a generative pre-trained transformer developed by OpenAI, an AI research laboratory based in San Francisco. It is an open-source natural language processing (NLP) model that uses deep learning to produce human-like text.

GPT-2 can generate text that mimics human writing, such as stories, articles, and other forms of natural language. It can also be used for question answering and summarization.

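GPT-2 is a decoder-only transformer: it processes the input text as a sequence of tokens and generates output one token at a time. OpenAI pre-trained it on WebText, about 40 GB of text from roughly 8 million web pages, and the pre-trained model can optionally be fine-tuned on additional task-specific data.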
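The key operation inside each transformer layer is masked (causal) self-attention: each position combines information from itself and earlier positions only, which is what makes left-to-right generation possible. The following is a single-head, pure-Python simplification. It assumes queries, keys, and values are the input vectors themselves and omits the learned projections, multiple heads, and feed-forward sublayers that GPT-2 also uses. The function name is hypothetical.

```python
import math


def causal_self_attention(x: list[list[float]]) -> list[list[float]]:
    """Single-head scaled dot-product attention with a causal mask.

    Simplifying assumption: queries, keys and values are the inputs themselves
    (no learned projections, one head only).
    """
    n, d = len(x), len(x[0])
    out = []
    for i in range(n):
        # Causal mask: position i only scores positions 0..i, never later ones.
        scores = [
            sum(a * b for a, b in zip(x[i], x[j])) / math.sqrt(d)
            for j in range(i + 1)
        ]
        # Softmax over the visible positions (subtract max for stability).
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Output is the attention-weighted average of the visible vectors.
        out.append([sum(w * x[j][k] for j, w in enumerate(weights)) for k in range(d)])
    return out


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in causal_self_attention(tokens):
    print([round(v, 3) for v in row])
```

Because of the mask, changing a later token never changes the outputs at earlier positions.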

GPT-2 can be used for many applications in natural language processing, including text generation, summarization, question answering, and more.

GPT-2 can produce text that is often hard to distinguish from human writing, especially in short passages, although longer outputs can repeat themselves or drift off topic.
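How coherent the output looks depends partly on the decoding strategy. One widely used option with GPT-2 is top-k sampling: keep only the k highest-scoring candidate tokens and sample among them, which avoids the low-probability tokens that derail text. The sketch below is an illustration over a hypothetical set of scores; the function name and the value of k are assumptions, not settings taken from the text above.

```python
import math
import random


def top_k_sample(logits: dict[str, float], k: int, rng: random.Random) -> str:
    """Sample the next token from the k highest-scoring candidates only."""
    # Keep the k tokens with the largest logits; the rest get zero probability.
    top = sorted(logits.items(), key=lambda kv: kv[1], reverse=True)[:k]
    # Softmax weights over the survivors (subtract the max for stability).
    m = max(v for _, v in top)
    weights = [math.exp(v - m) for _, v in top]
    tokens = [t for t, _ in top]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical scores for the word after "the cat".
logits = {"sat": 2.0, "ran": 1.5, "slept": 0.5, "banana": -3.0}
rng = random.Random(0)
print([top_k_sample(logits, k=2, rng=rng) for _ in range(5)])
```

With k=1 this reduces to greedy decoding; larger k gives more varied but less predictable text.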

GPT-2 is open source: OpenAI publicly released its code and trained model weights for anyone to use.

GPT-2 was trained mainly on English text. OpenAI filtered non-English pages out of its training data, so although the model picked up some other languages, its ability in them is limited.

GPT-2 is free to use.

The largest GPT-2 model has about 1.5 billion parameters, which made it one of the largest language models released when it came out in 2019. OpenAI also released smaller versions.
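The 1.5 billion figure can be checked with a little arithmetic. This sketch assumes the publicly released GPT-2 configurations (vocabulary of 50,257 tokens, 1,024 positions, MLP hidden width four times the model width, output layer tied to the token embeddings) and counts biases and LayerNorm parameters; the function name is hypothetical.

```python
def gpt2_param_count(n_layer: int, d_model: int,
                     vocab_size: int = 50257, n_positions: int = 1024) -> int:
    """Count parameters of a GPT-2-style decoder whose output layer reuses
    (is tied to) the token embedding matrix."""
    # Token embeddings plus learned position embeddings.
    embeddings = vocab_size * d_model + n_positions * d_model
    # Per block: attention QKV (3d^2 + 3d), attention output (d^2 + d),
    # MLP up-projection (4d^2 + 4d), MLP down-projection (4d^2 + d),
    # and two LayerNorms with scale and shift (4d).
    per_block = 12 * d_model**2 + 13 * d_model
    final_layer_norm = 2 * d_model
    return embeddings + n_layer * per_block + final_layer_norm


for name, layers, width in [("small", 12, 768), ("medium", 24, 1024),
                            ("large", 36, 1280), ("XL", 48, 1600)]:
    print(f"{name:>6}: {gpt2_param_count(layers, width):,}")
# Expected: small 124,439,808; medium 354,823,168;
#           large 774,030,080; XL 1,557,611,200
```

The 48-layer, 1,600-wide configuration lands just under 1.56 billion, which is where the "1.5 billion" figure comes from.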

GPT-2 can run on either a GPU or a CPU, but it typically runs much faster and more efficiently on a GPU.
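Whether the largest model fits on a given GPU depends mostly on memory. A rough estimate multiplies the parameter count by the bytes per parameter. The sketch assumes the 1,557,611,200-parameter count of the public XL configuration and covers weights only; activations and the attention key/value cache used during generation need additional memory.

```python
def weight_memory_gb(n_params: int, bytes_per_param: int) -> float:
    """Memory needed just to store the weights, in gigabytes (10**9 bytes)."""
    return n_params * bytes_per_param / 1e9


XL_PARAMS = 1_557_611_200  # assumed: GPT-2 XL count from its public configuration

print(f"fp32: {weight_memory_gb(XL_PARAMS, 4):.2f} GB")  # 32-bit floats, about 6.23 GB
print(f"fp16: {weight_memory_gb(XL_PARAMS, 2):.2f} GB")  # 16-bit floats, about 3.12 GB
```

Halving the precision halves the weight memory, which is one reason half-precision inference is common on GPUs.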

| Related model | Difference from GPT-2 |
|---|---|
| XLNet | Uses permutation language modeling over many factorization orders instead of GPT-2's fixed left-to-right language modeling |
| BERT | Encoder trained with masked language modeling, so it learns bidirectional context; GPT-2 learns unidirectional (left-to-right) context |
| RoBERTa | Improves BERT's performance by changing the training recipe, including longer sequences and larger batches |
| CTRL | Lets the user steer generated text with control codes (special tokens) |
| GPT-3 | Uses a much larger dataset and model (175 billion parameters), giving better accuracy and generality |
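The GPT-2 versus BERT row comes down to the training objective. The sketch below shows how each objective turns one sentence into training targets. It is a simplification under stated assumptions: the function names are hypothetical, tokens are whole words, and real BERT training selects about 15% of positions at random (and does not always substitute `[MASK]`), whereas here the masked positions are passed in explicitly.

```python
def causal_lm_pairs(tokens: list[str]) -> list[tuple[list[str], str]]:
    """GPT-2-style objective: predict each token from the tokens to its left."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def masked_lm_example(tokens: list[str],
                      mask_positions: set[int]) -> tuple[list[str], dict[int, str]]:
    """BERT-style objective (simplified): hide chosen tokens and predict them
    from context on both sides."""
    inputs = ["[MASK]" if i in mask_positions else t for i, t in enumerate(tokens)]
    targets = {i: tokens[i] for i in sorted(mask_positions)}
    return inputs, targets


sentence = ["the", "cat", "sat", "down"]
for context, target in causal_lm_pairs(sentence):
    print(context, "->", target)
# The masked word "sat" can be predicted using "down", which comes after it.
print(masked_lm_example(sentence, {2}))
```

The causal pairs never expose a token to the right of the target, which is why GPT-2 can generate text left to right, while BERT's bidirectional view suits understanding tasks better than generation.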

GPT-2 (Generative Pre-trained Transformer 2) is an open-source natural language processing model from OpenAI. It is a deep learning model trained on a large dataset of web text, and it generates human-like text. In OpenAI's original evaluation it achieved state-of-the-art results on 7 of 8 language modeling benchmarks without task-specific training, and it showed some zero-shot ability on tasks such as summarization, question answering, and machine translation, though it performed well below dedicated systems on those tasks. GPT-2 has been used to generate both short and long pieces of text, from single sentences to entire articles.

GPT-2 is a large neural network pre-trained on a vast corpus of text, which lets it capture statistical patterns in language that models trained on smaller datasets miss. It can then be fine-tuned for specific tasks, such as summarization or machine translation, or used directly to generate high-quality text from a prompt.
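Prompting alone can steer the pre-trained model toward a task. In the GPT-2 paper, OpenAI induced summarization by appending "TL;DR:" after an article, and translation by conditioning on example pairs in the form "english sentence = french sentence" followed by a final "english sentence =". The helpers below only build such prompts. Their names and the exact whitespace are assumptions, and the model call itself is left out.

```python
def summarization_prompt(article: str) -> str:
    """Zero-shot summarization cue: the article followed by 'TL;DR:'."""
    return f"{article.strip()}\nTL;DR:"


def translation_prompt(examples: list[tuple[str, str]], source: str) -> str:
    """Example pairs 'english = french', then the sentence to translate.

    The model is expected to continue the text after the final '='.
    """
    lines = [f"{en} = {fr}" for en, fr in examples]
    lines.append(f"{source} =")
    return "\n".join(lines)


print(summarization_prompt("GPT-2 is a language model released by OpenAI in 2019."))
print(translation_prompt([("Hello.", "Bonjour."), ("Good night.", "Bonne nuit.")],
                         "Thank you."))
```

Fine-tuning usually beats this kind of prompting on a specific task, but prompting needs no extra training data.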

In OpenAI's evaluation, GPT-2 outperformed earlier models on most of the language modeling benchmarks tested. It has also been used to build applications such as automated news writers and text summarizers. OpenAI released GPT-2 in four sizes, from a small model up to the 1.5-billion-parameter version, so you can choose the size that best fits your needs and hardware.

Overall, GPT-2 is an important development in natural language processing. Its ability to generate fluent text and to perform tasks from a prompt could significantly change how people interact with computers.
