MuJoCo (Multi-Joint dynamics with Contact) is a popular physics engine that has garnered significant attention in recent years. Designed to enable detailed and efficient rigid body simulations with contacts, MuJoCo has become an essential tool for many researchers and developers involved in the development and evaluation of control algorithms. With its advanced features and reliable performance, MuJoCo has proven to be an invaluable asset for a wide range of applications across various industries. This article explores the various aspects of MuJoCo and its importance in the field of robotics and control systems.

Brain.ai is a cutting-edge natural language processing platform powered by artificial intelligence. It provides businesses and individuals with the ability to analyze and understand vast amounts of unstructured data in real-time. With its advanced algorithms, Brain.ai can identify patterns, extract insights, and generate accurate predictions, making it an essential tool for decision-making in various industries. Its user-friendly interface and customizable features make it easy to integrate into existing workflows, allowing users to harness the full potential of AI-powered data analysis.

BERT-base is an advanced AI language processing model that has revolutionized the field of natural language processing (NLP). The Bidirectional Encoder Representations from Transformers (BERT) technology enables complex tasks, such as question answering and language inference, to be performed with exceptional accuracy. This cutting-edge tool has transformed the way we analyze and understand human language, making it an indispensable asset for businesses, researchers, and developers alike.

Houndify is a revolutionary platform that leverages AI-based voice and natural language capabilities to provide organizations with the ability to create custom voice and natural language experiences. With its cutting-edge technology, Houndify offers a unique solution for businesses seeking to enhance their customer engagement and streamline their operations. This platform is designed to enable organizations to develop personalized and intuitive voice interfaces that can be integrated into a wide range of applications and devices, making it an ideal choice for companies looking to stay ahead in today's competitive marketplace.

Jitterbit is a robust software solution that has revolutionized how businesses automate processes, synchronize data between applications, and streamline their workflow. This API integration platform offers users an intuitive and user-friendly interface that simplifies the process of connecting different systems and applications, enabling companies to optimize their operations and stay ahead of the competition. With the ability to seamlessly integrate with a wide range of third-party applications, Jitterbit has become an essential tool for businesses looking to improve efficiency, reduce manual errors, and enhance productivity.

GraphLab Create is an advanced AI platform that has revolutionized the way machine learning practitioners create and deploy AI applications in production. It provides a powerful set of tools and algorithms that enable developers to build and optimize models efficiently. With GraphLab Create, developers can create scalable and high-performance applications for various domains, including finance, healthcare, e-commerce, and more. Its user-friendly interface and easy-to-use features make it a go-to solution for machine learning practitioners worldwide. This platform has transformed the way businesses operate by providing a comprehensive solution for creating and deploying AI applications.

CharacterAI

Personality Insights and Predictive Analytics

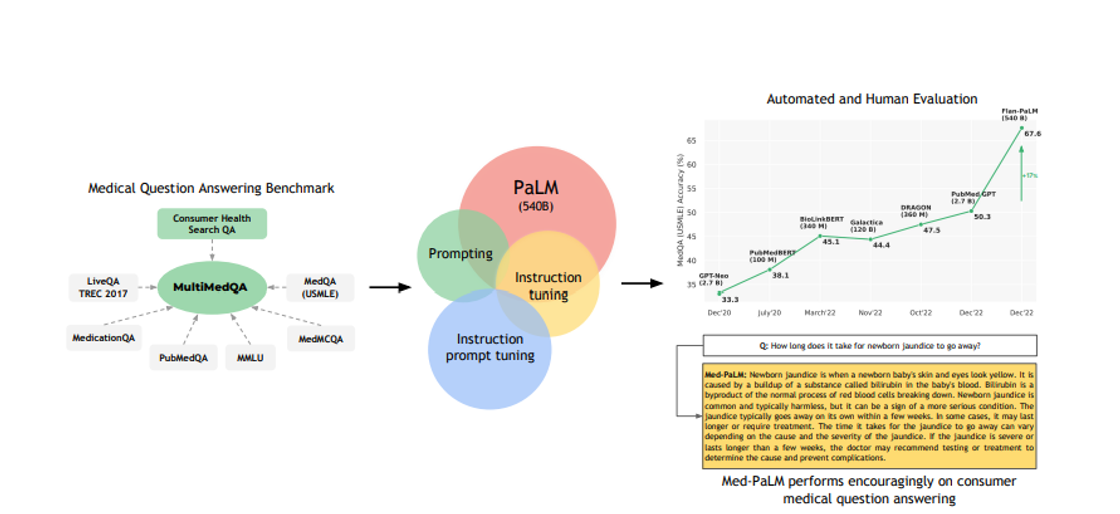

Med-PaLM

AI Powered Medical Imaging

YouChat

AI Chatbot Builder

Wolframalpha

Wolfram|Alpha: Computational Intelligence

Voicemod

Free Real Time Voice Changer & Modulator - Voicemod

Wordtune

Wordtune | Your personal writing assistant & editor

Caktus

AI solutions for students to write essays, discuss questions, general coding help and professional job application help.

Voice.ai

Custom Voice Solutions

Reinforcement learning has become an increasingly popular field of study, with applications ranging from robotics to game AI. Research in this area can be challenging, particularly when it comes to testing and comparing algorithms on a consistent set of tasks. Gym Retro addresses this by turning classic video games into environments that expose the standard Gym interface, so any algorithm written against that interface can be run on them. Gym Retro does not implement learning algorithms itself; it supplies the environments, and researchers pair it with their own agents or with an external algorithm library. This lets researchers test and compare algorithms across many games and focus on the core aspects of their work. In this article, we take a closer look at what Gym Retro offers and how it can benefit researchers in reinforcement learning.

Gym Retro is a toolkit for reinforcement learning research that turns classic video games into Gym-compatible environments. It works with any algorithm that uses the Gym interface, but it does not implement algorithms itself.

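Gym Retro does not ship algorithm implementations. Because its environments follow the Gym interface, they can be used with algorithms such as Q-learning, SARSA, DQN, A3C, and PPO, whether you implement them yourself or take them from an external library.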
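Since an agent interacts with an environment only through `reset` and `step`, an algorithm such as Q-learning can be written independently of the toolkit. The following is a minimal sketch under stated assumptions: `CorridorEnv` is a hypothetical toy environment invented for illustration (it is not part of Gym Retro), and it follows the older Gym convention in which `step` returns `(observation, reward, done, info)`. Tabular Q-learning is used here only because it is compact; image-based retro games would normally need a function approximator such as DQN.

```python
import random
from collections import defaultdict


class CorridorEnv:
    """Hypothetical stand-in for a Gym-style environment (not part of Gym Retro).

    The agent starts at cell 0 and receives reward 1.0 on reaching the last cell.
    """

    n_actions = 2  # 0 = move left, 1 = move right

    def __init__(self, length=5):
        self.length = length
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):
        move = 1 if action == 1 else -1
        self.pos = max(0, min(self.length - 1, self.pos + move))
        done = self.pos == self.length - 1
        reward = 1.0 if done else 0.0
        return self.pos, reward, done, {}


def q_learning(env, episodes=200, alpha=0.5, gamma=0.9,
               epsilon=0.1, max_steps=100, seed=0):
    """Tabular Q-learning with an epsilon-greedy behaviour policy."""
    rng = random.Random(seed)
    q = defaultdict(lambda: [0.0] * env.n_actions)
    for _ in range(episodes):
        state = env.reset()
        for _ in range(max_steps):
            if rng.random() < epsilon:
                action = rng.randrange(env.n_actions)  # explore
            else:
                best = max(q[state])  # exploit, breaking ties at random
                action = rng.choice(
                    [a for a, v in enumerate(q[state]) if v == best])
            next_state, reward, done, _ = env.step(action)
            # Bootstrap from the next state unless the episode has ended.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q
```

Calling `q_learning(CorridorEnv())` returns a table in which moving right from the cell next to the goal is valued higher than moving left. The same function would work against any environment that offers the same `reset`/`step` contract with a small discrete state and action space.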

Yes, Gym Retro is an open-source toolkit and can be accessed on GitHub.

Gym Retro emulates classic video games, such as Super Mario Bros., Sonic the Hedgehog, and Atari titles. ROMs for commercial games are not bundled with the toolkit and must be obtained separately.

Yes, you can use Gym Retro to train your own reinforcement learning models or test existing models.

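No. Gym Retro is built for reinforcement learning, which is neither supervised nor unsupervised learning: an agent learns from the rewards it receives while interacting with a game.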

Gym Retro currently supports Python.

Gym Retro has no special hardware requirements, but a high-performance CPU or GPU is recommended for faster training.

Getting started with Gym Retro is straightforward and requires minimal setup. You can install the toolkit using pip and start training your models right away.
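As a rough illustration of those first steps, the package can be installed with `pip3 install gym-retro`, after which a random agent can be run in a few lines. The sketch below assumes the older Gym step signature that returns `(observation, reward, done, info)`, and it uses `Airstriker-Genesis`, the non-commercial game bundled with the toolkit. The helper names `run_random_episode` and `make_retro_env` are illustrative, not part of the library.

```python
def run_random_episode(env, max_steps=1000):
    """Play one episode with uniformly random actions; return the total reward.

    Works with any environment that follows the Gym-style reset/step contract.
    """
    env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = env.action_space.sample()  # pick a random action
        _obs, reward, done, _info = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward


def make_retro_env(game="Airstriker-Genesis"):
    """Create a Gym Retro environment (requires the gym-retro package)."""
    # Imported lazily so run_random_episode can be used without gym-retro.
    import retro
    return retro.make(game=game)
```

With gym-retro installed, `run_random_episode(make_retro_env())` plays up to 1000 random steps and returns the accumulated reward. A random agent is only a smoke test that the installation works; a learning agent would replace the `action_space.sample()` call with its own policy.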

Yes, there is an active community forum for Gym Retro where you can ask questions, share ideas, and collaborate with other researchers.

| Competitor | Description | Difference |
|---|---|---|
| OpenAI Gym | A toolkit for developing and comparing reinforcement learning algorithms. | Gym Retro focuses on retro games, while OpenAI Gym is more general |
| RLkit | A lightweight and easily extensible framework for creating and analyzing reinforcement learning algorithms. | RLkit provides algorithm implementations, while Gym Retro provides game environments |
| Stable Baselines | A set of high-quality implementations of reinforcement learning algorithms in Python. | Stable Baselines implements algorithms, while Gym Retro supplies environments to run them on |
| RLlib | An open-source library for reinforcement learning research that includes support for multi-agent systems and distributed environments. | RLlib focuses on distributed training, while Gym Retro focuses on retro games |

Gym Retro has gained popularity in reinforcement learning research because its environments follow the Gym interface and therefore work with many popular reinforcement learning algorithms. It does not implement those algorithms itself, but it gives researchers and developers a large set of game environments on which to train and evaluate them, including recent deep reinforcement learning methods.

One of the key features of Gym Retro is its ability to support a wide range of environments, which are essential for testing and evaluating reinforcement learning algorithms. These environments include classic games such as Super Mario Bros, Sonic the Hedgehog, and Street Fighter, among others.

Another advantage of Gym Retro is its ease of use, as it provides a simple and intuitive interface for researchers and developers to work with. This makes it an ideal tool for those who are new to reinforcement learning research, as well as those who are experienced and looking for a reliable and efficient tool.

Moreover, Gym Retro is open-source software, meaning that it is free for anyone to use and modify according to their needs. This makes it accessible to a wide range of users, from individual researchers to large organizations.

In conclusion, if you are interested in reinforcement learning research, Gym Retro is a valuable toolkit to consider. It is compatible with popular algorithms through the Gym interface, provides a wide range of game environments, and is easy to use. Because it is open-source software, anyone can use it and modify it to suit their needs.
