Keras is a widely used open-source library designed to simplify building deep learning models. Written in Python, it provides an easy-to-use interface for defining neural networks and lets developers prototype, test, and deploy models quickly. Its intuitive syntax and rich feature set have made it a popular choice among machine learning practitioners and researchers.

DeepBrain Chain AI is a platform that uses blockchain technology to support secure data exchange for decentralized artificial intelligence computing. It aims to give users access to advanced AI capabilities without compromising data privacy or security, and to make collaborative AI research and development easier by providing a secure, efficient way to share and access data. The project positions itself as a decentralized, cost-effective, and safer way to use AI computing.

BigDL is an open-source distributed deep learning library that runs on Apache Spark. It provides a unified framework for building big data and artificial intelligence (AI) applications with high performance and scalability. BigDL integrates with the Spark ecosystem, which makes it easier to build and deploy large-scale machine learning models, and its support for popular deep learning frameworks such as TensorFlow and Keras lets developers reuse existing models and tools.

MXNet is an open-source deep learning library used by researchers and developers to create and deploy deep neural networks. Its flexibility, scalability, and performance have made it a popular choice for building machine learning models in a variety of industries. It offers tools and features for tackling complex problems in natural language processing, computer vision, and other areas of artificial intelligence.

PlaidML is a deep learning engine designed to run on a wide range of hardware, which makes it an accessible choice for developers. It suits people who want an easy-to-use platform that can handle complex tasks efficiently. With PlaidML, developers can build and optimize machine learning models largely independently of the hardware they have at their disposal, and this flexibility has made it a popular option for those seeking a portable deep learning engine.

The use of AI technology in negotiations has become increasingly popular, as it can offer a more efficient and cost-effective way to reach agreements. A recent example is the deepfake AI negotiation involving DoNotPay and Wells Fargo, which combined GPT-J, Resemble.ai, and GPT-3. Deepfake AI negotiation promises a faster way to negotiate on a customer's behalf, with the language models generating the conversation and the voice technology delivering it.

Canva Text-to-Image

AI-Generated Graphics

Grammarly

Grammarly: Free Online Writing Assistant

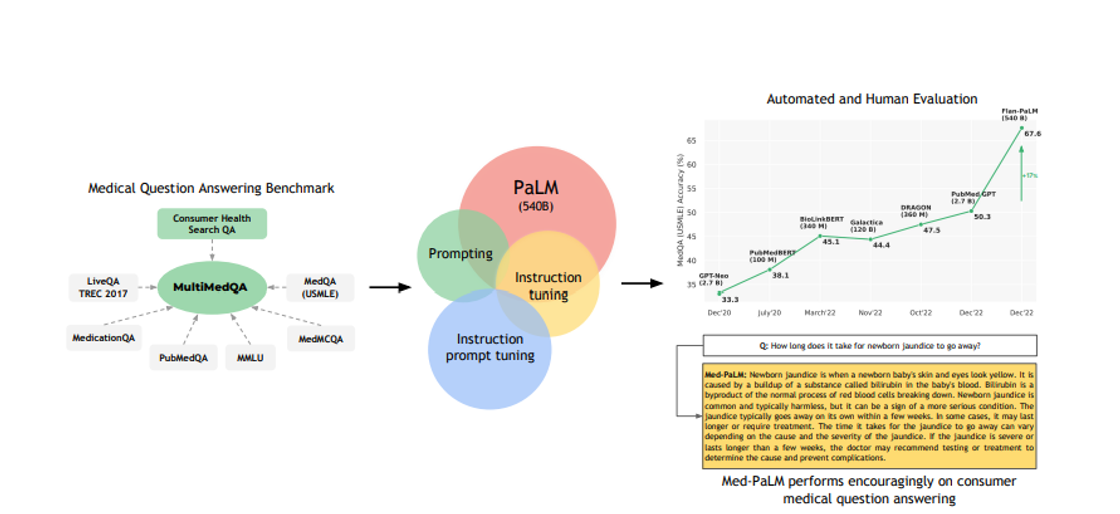

Med-PaLM

AI Powered Medical Imaging

Media.io

Media.io - Online Free Video Editor, Converter, Compressor

Lexica

The Stable Diffusion search engine.

Caktus

AI solutions for students to write essays, discuss questions, general coding help and professional job application help.

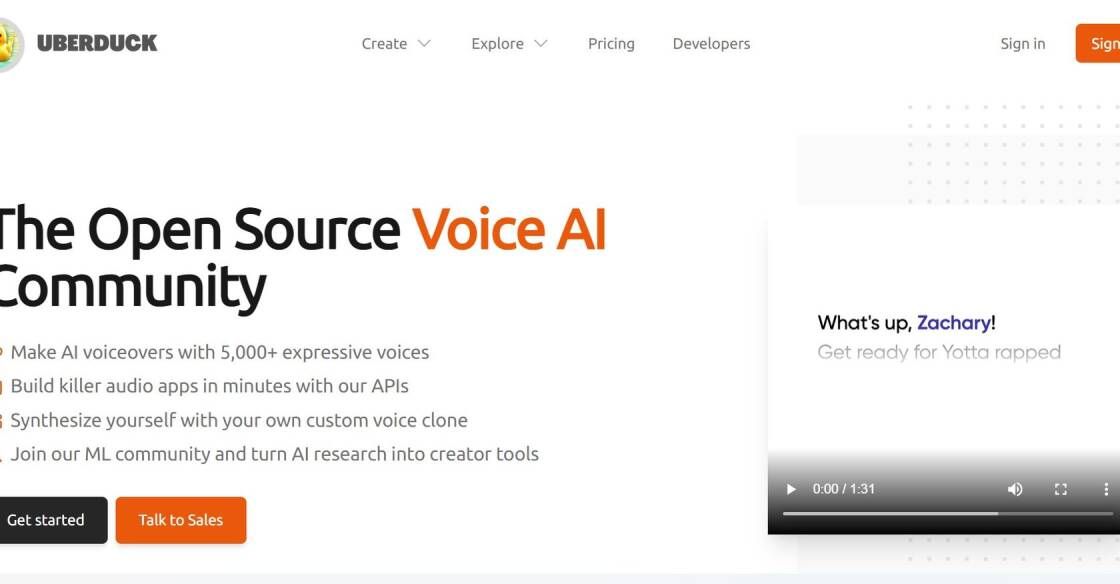

Uberduck

Uberduck | Text-to-speech, voice automation, synthetic media

AI Image Enlarger

AI Image Enlarger | Enlarge Image Without Losing Quality!

The NVIDIA Deep Learning Platform is a comprehensive suite of tools designed to accelerate deep learning application research and development. It enables researchers and developers to build, train, and deploy sophisticated neural networks quickly and efficiently. The platform combines optimized algorithms, high-performance computing, and state-of-the-art hardware so that users can create complex models and get results faster. With it, researchers and developers can tackle a wide range of applications, from image recognition and natural language processing to autonomous driving and robotics. The platform also provides access to a large community of experts who can offer support and advice on best practices for developing and deploying deep learning applications.

Some of the tools included in the NVIDIA Deep Learning Platform are TensorRT, cuDNN, CUDA Toolkit, and DIGITS.

TensorRT is a high-performance deep learning inference optimizer and runtime engine designed to accelerate deep learning applications on NVIDIA GPUs.

cuDNN is a GPU-accelerated library of primitives for deep neural networks that provides optimized implementations of key building blocks for deep learning algorithms.

The CUDA Toolkit is a comprehensive development environment for building GPU-accelerated applications that includes a compiler, libraries, and tools.

DIGITS is a web-based tool for training deep neural networks that simplifies common deep learning tasks such as managing data, designing and training models, and monitoring performance.

The NVIDIA Deep Learning Platform offers faster model training, increased accuracy, and reduced time-to-insight, allowing developers to iterate more quickly and efficiently.

The NVIDIA Deep Learning Platform can benefit a wide range of industries, including healthcare, finance, automotive, and gaming.

The NVIDIA Deep Learning Platform is designed to be easy to use, with intuitive interfaces and comprehensive documentation to help developers get started quickly.

The NVIDIA Deep Learning Platform is compatible with a variety of popular deep learning frameworks and tools, including TensorFlow, PyTorch, and Caffe.

| Competitor | Description | Key Features |
|---|---|---|
| Google TensorFlow | Open-source software library for dataflow and differentiable programming across a range of tasks | Distributed training, GPU acceleration, support for multiple languages |
| Microsoft Cognitive Toolkit | Free, easy-to-use, open-source, commercial-grade toolkit that trains deep learning algorithms to learn like the human brain | Distributed training, GPU acceleration, cognitive services integration |
| Amazon Web Services (AWS) Machine Learning | Cloud-powered platform that offers a broad set of machine learning services and APIs | Automated model building, real-time streaming, integration with other AWS services |
| IBM Watson Studio | AI-powered platform that allows data scientists to prepare data, build models, and deploy solutions at scale | AutoAI, visual modeling, collaboration tools |

NVIDIA Deep Learning Platform is a comprehensive suite of tools that accelerates deep learning application research and development. It provides an end-to-end platform for building and deploying deep learning models, from data preparation to model training and inference.

The NVIDIA Deep Learning Platform consists of several components, including the NVIDIA CUDA Toolkit, cuDNN library, TensorRT, and DeepStream SDK. These tools work together to provide a seamless and efficient workflow for deep learning development.

The CUDA Toolkit is a software development kit that provides a programming interface for GPUs. It includes a compiler, libraries, and development tools that enable developers to write high-performance code for NVIDIA GPUs. The cuDNN library provides optimized primitives for deep learning operations, making it easier to develop and train complex models.
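
To make "primitive" concrete, here is a minimal pure-Python sketch of a 2D convolution forward pass, one of the building blocks that a library such as cuDNN accelerates on the GPU. It is a reference illustration only and not cuDNN's API: the function name `conv2d_valid` and the list-of-lists data layout are assumptions made for this example.

```python
def conv2d_valid(image, kernel):
    """Naive 2D cross-correlation with stride 1 and no padding.

    Hypothetical helper for illustration; a GPU library would run the
    same arithmetic in parallel with heavily tuned kernels.
    """
    img_h, img_w = len(image), len(image[0])
    k_h, k_w = len(kernel), len(kernel[0])
    out_h, out_w = img_h - k_h + 1, img_w - k_w + 1

    output = [[0.0] * out_w for _ in range(out_h)]
    for row in range(out_h):
        for col in range(out_w):
            acc = 0.0
            # Slide the kernel over the window anchored at (row, col).
            for i in range(k_h):
                for j in range(k_w):
                    acc += image[row + i][col + j] * kernel[i][j]
            output[row][col] = acc
    return output


image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
kernel = [
    [1, 0],
    [0, -1],
]
result = conv2d_valid(image, kernel)
print(result)  # [[-4.0, -4.0], [-4.0, -4.0]]
```

The four nested loops are what make this operation expensive on a CPU and a natural fit for GPU acceleration, since every output element can be computed independently.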

TensorRT is a high-performance deep learning inference engine that optimizes trained models for deployment on NVIDIA GPUs. It provides fast and efficient inference performance, enabling real-time applications in industries such as autonomous vehicles, robotics, and healthcare.
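
One common way an inference optimizer can shrink and speed up a trained model is to store weights at reduced precision. The sketch below illustrates symmetric INT8 quantization in plain Python. It is a conceptual illustration under simplifying assumptions, not TensorRT's API or its actual calibration procedure; `quantize_int8` and `dequantize` are hypothetical names.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale.

    Hypothetical helper: real toolchains typically pick scales per
    tensor or per channel from calibration data.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the quantized integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Each restored value differs from the original by at most half a step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized, max_error <= scale / 2 + 1e-12)
```

The trade-off is a small, bounded rounding error per weight in exchange for a representation that needs a quarter of the memory of 32-bit floats.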

The DeepStream SDK is a streaming analytics toolkit that enables developers to build intelligent video analytics applications. It provides a framework for processing and analyzing video streams in real-time, using deep learning models to detect and recognize objects and events.
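
The general shape of such a pipeline (frames in, detections out) can be sketched with Python generators. This is a conceptual illustration only and does not use the DeepStream SDK: the frame dictionaries, the `analyze_stream` function, and the brightness-threshold "detector" standing in for a deep learning model are all assumptions made for the example.

```python
def detect_bright_object(frame):
    """Stand-in for a neural network: flags frames with a bright pixel."""
    return ["bright_object"] if max(frame["pixels"]) > 200 else []


def analyze_stream(frames, detector):
    """Lazily process frames one at a time and yield detection events."""
    for frame in frames:
        labels = detector(frame)
        if labels:
            yield {"frame": frame["index"], "labels": labels}


# Hypothetical frames; a real source would decode them from a video stream.
frames = [
    {"index": 0, "pixels": [10, 20, 30]},
    {"index": 1, "pixels": [10, 250, 30]},
    {"index": 2, "pixels": [5, 5, 5]},
]
events = list(analyze_stream(frames, detect_bright_object))
print(events)  # [{'frame': 1, 'labels': ['bright_object']}]
```

Because `analyze_stream` is a generator, each frame is handled as it arrives rather than after the whole stream has been collected, which is the property a real-time analytics pipeline depends on.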

Overall, the NVIDIA Deep Learning Platform provides a powerful set of tools and frameworks for accelerating deep learning research and development. It empowers researchers and developers to build and deploy cutting-edge deep learning models in a fast and efficient manner, enabling breakthroughs in fields such as computer vision, natural language processing, and robotics.
