The Horizon AI Template helps developers build AI SaaS applications and, according to its makers, speeds up development tenfold. Instead of starting from scratch, developers get a solid foundation for crafting AI-powered applications, so they can harness artificial intelligence without compromising on quality or efficiency. The template is aimed at teams that need to ship AI-powered software quickly in today's fast-paced digital landscape.

Pragma is an innovative knowledge assistant designed to streamline and enhance your company's workflow. From providing quick and easy access to critical information to analyzing data to make informed decisions, Pragma has the tools your business needs to succeed. This powerful software offers a range of features that can be customized to fit your company's unique needs, making it an indispensable tool for any organization. With Pragma on your side, you'll have access to the information and insights you need to stay ahead of the competition and achieve your goals.

QuantPlus is a cutting-edge AI engine that enables businesses to transform their performance data into actionable insights for creating effective ads. With its advanced analytical capabilities, QuantPlus helps marketers and advertisers to optimize their advertising strategies and achieve impressive results. This powerful tool is designed to provide valuable insights into customer behavior, demographics, and preferences, enabling companies to create targeted campaigns that resonate with their audience and deliver measurable results. Whether you are looking to increase brand awareness, drive website traffic, or boost sales, QuantPlus offers the data-driven insights and intelligence you need to succeed in today's competitive market.

Looking to improve your pitch and secure funding for your startup? Fry My Deck is a platform that helps entrepreneurs practice their pitch with questions from some of Silicon Valley's best investors and venture capitalists. Tap into the insights of industry leaders and refine your pitch accordingly. Whether you're just starting out or ready to take your business to the next level, Fry My Deck has the tools you need to succeed in the highly competitive world of startups.

LemonRecruiter is an innovative platform that promises to make recruitment and LinkedIn outreach much easier for businesses. With its user-friendly interface, LemonRecruiter allows companies to easily source and connect with potential candidates on LinkedIn. Additionally, its comprehensive tools streamline the entire recruitment process, from job postings and applicant tracking to interview scheduling and feedback management. With LemonRecruiter, businesses can save time, money, and resources while ensuring they find the best-fit candidates for their open positions.

The Intel OpenVINO Toolkit is a framework for optimizing and deploying deep learning models, with a strong focus on high-performance computer vision applications. It is designed to maximize performance on Intel hardware, including CPUs, GPUs, and (in earlier releases) FPGAs, which makes it a good fit for applications such as robotics, surveillance, and smart cameras. With its collection of pre-trained models and libraries, developers can quickly deploy trained models on a variety of edge devices. The toolkit helps developers shorten their development process and deliver accurate, efficient computer vision applications.

Canva Text-to-Image

AI-Generated Graphics

Notion AI

Leverage the limitless power of AI in any Notion page. Write faster, think bigger, and augment creativity. Like magic!

Writesonic

Writesonic - Best AI Writer, Copywriting & Paraphrasing Tool

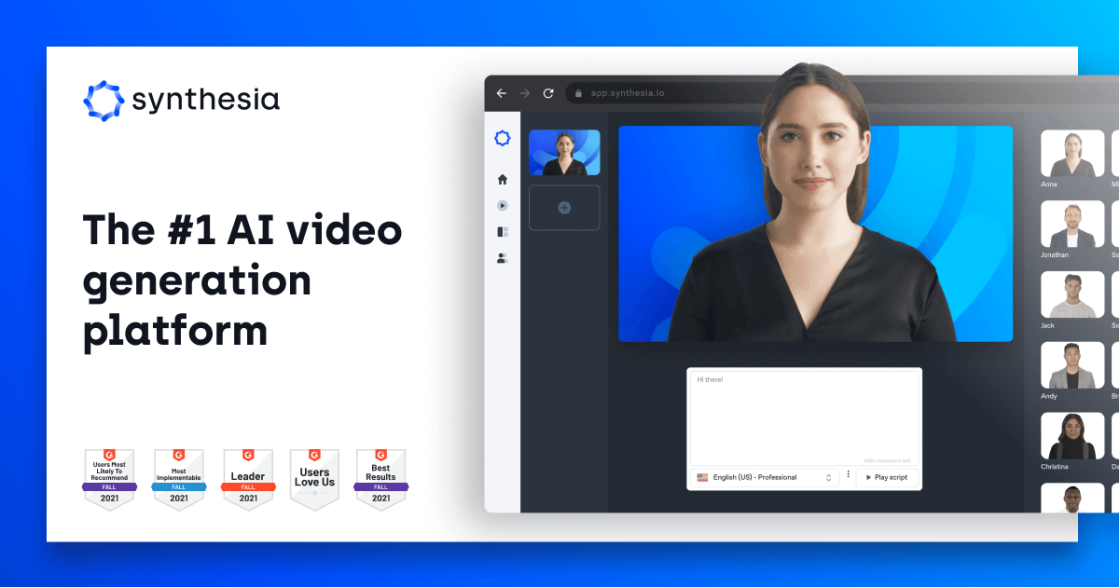

Synthesia

Synthesia | #1 AI Video Generation Platform

GPT For Sheets

GPT for Sheets™ and Docs™ - Google Workspace Marketplace

WatermarkRemover.io

Watermark Remover - Remove Watermarks Online from Images for Free

Make (formerly known as Integromat)

Automation Platform

Erase.bg

Free Background Image Remover: Remove BG from HD Images Online - Erase.bg

NVIDIA TensorRT is a software library for optimizing trained deep learning models for inference. It is built to take advantage of NVIDIA GPUs and provides a high-performance runtime for deploying deep learning models in production environments. TensorRT optimizes a network by fusing layers, eliminating unused layers and redundant computations, and, when reduced precision is enabled, quantizing weights and activations. The result is faster inference and reduced memory usage.
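
Layer fusion can be illustrated with a common case: folding an inference-time batch-normalization step into the preceding linear (or convolution) layer, so that two operations become one with adjusted weights and bias. The sketch below is plain Python and does not use TensorRT's API; the function names and parameter values are hypothetical, and it only demonstrates why the fused layer produces the same output as the unfused pair.

```python
import math

def linear(x, W, b):
    """Dense layer for one input vector: y_i = sum_j W[i][j] * x[j] + b[i]."""
    return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]

def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-time batch normalization using stored running statistics."""
    return [g * (yi - m) / math.sqrt(v + eps) + be
            for yi, g, be, m, v in zip(y, gamma, beta, mean, var)]

def fold_bn_into_linear(W, b, gamma, beta, mean, var, eps=1e-5):
    """Return (W_f, b_f) so one linear layer reproduces linear followed by batch_norm."""
    W_f, b_f = [], []
    for row, bi, g, be, m, v in zip(W, b, gamma, beta, mean, var):
        s = g / math.sqrt(v + eps)        # per-output-channel scale
        W_f.append([s * w for w in row])  # scale that output's weight row
        b_f.append(s * (bi - m) + be)     # fold the mean and beta into the bias
    return W_f, b_f

# Hypothetical parameters for a 2-input, 2-output layer.
W = [[1.0, 2.0], [0.5, -1.0]]
b = [0.1, -0.2]
gamma, beta = [2.0, 0.5], [0.0, 1.0]
mean, var = [0.3, -0.1], [4.0, 1.0]
x = [1.5, -0.5]

unfused = batch_norm(linear(x, W, b), gamma, beta, mean, var)  # two operations
W_f, b_f = fold_bn_into_linear(W, b, gamma, beta, mean, var)
fused = linear(x, W_f, b_f)                                    # one operation
print(all(abs(u - f) < 1e-9 for u, f in zip(unfused, fused)))  # True
```

The fold works because batch normalization at inference time is just a per-channel scale and shift, which can be absorbed into the weights and bias computed once ahead of time.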

TensorRT accepts models trained in popular deep learning frameworks such as TensorFlow and PyTorch, most commonly by importing them in the ONNX format; older releases also included a Caffe parser. Its FP16 and INT8 precision modes reduce memory usage and increase throughput while largely preserving accuracy (INT8 requires calibration data or explicit quantization information).
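
INT8 mode stores values as 8-bit integers together with a scale factor. The sketch below shows symmetric per-tensor quantization in plain Python, with the scale chosen so that the largest absolute weight maps to 127. It is a simplified illustration, not TensorRT's calibration procedure, and the function names and weight values are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization: q = round(v / scale), clamped to [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # largest magnitude maps to +/-127
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale

def dequantize_int8(q, scale):
    """Map 8-bit integers back to approximate real values."""
    return [qi * scale for qi in q]

# Hypothetical FP32 weights.
weights = [0.82, -1.27, 0.05, 0.4, -0.003]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)

max_error = max(abs(w - r) for w, r in zip(weights, restored))
fp32_bytes = 4 * len(weights)  # 4 bytes per FP32 value
int8_bytes = 1 * len(weights)  # 1 byte per INT8 value (plus one shared scale)
print(q, scale)
print(f"max error {max_error:.4f}, {fp32_bytes} bytes -> {int8_bytes} bytes")
```

Each restored value is within half a quantization step (`scale / 2`) of the original, which is why INT8 can cut weight storage to a quarter of FP32 with a bounded rounding error.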

The library can be used for a variety of applications, including image classification, object detection, natural language processing, and speech recognition. It also includes a comprehensive set of APIs and tools for monitoring and debugging deep learning models.

Overall, NVIDIA TensorRT is a powerful and efficient tool for optimizing deep learning models and deploying them in production environments. Its ability to leverage the power of NVIDIA GPUs makes it an ideal choice for high-performance computing applications that require fast and accurate inference.

NVIDIA TensorRT is a high-performance deep learning inference optimizer and runtime library that can optimize and execute models on NVIDIA GPUs with low latency.

NVIDIA TensorRT can provide significant performance improvements, reduce memory usage, and improve energy efficiency for deep learning inference workloads.

NVIDIA TensorRT supports models from popular deep learning frameworks such as TensorFlow and PyTorch, typically imported through the ONNX model format.

NVIDIA TensorRT is optimized for low-latency, real-time processing and can deliver fast inference performance on NVIDIA GPUs.

NVIDIA TensorRT deployments can scale across multiple GPUs, for example by running separate engine instances on each GPU, to increase inference throughput.

NVIDIA CUDA is a general-purpose parallel computing platform and programming model, while NVIDIA TensorRT is a deep learning inference optimizer and runtime library built on top of CUDA.

NVIDIA TensorRT supports NLP models and has been used to accelerate inference for applications such as machine translation and sentiment analysis.

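NVIDIA TensorRT is not fully open source: the core library is proprietary software developed by NVIDIA Corporation, although NVIDIA publishes TensorRT's plugins, parsers, and samples as open-source code on GitHub.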

NVIDIA TensorRT requires a supported NVIDIA GPU along with compatible driver and CUDA versions; the minimum GPU compute capability depends on the TensorRT release.

NVIDIA TensorRT is designed specifically for deep learning inference and cannot be used to train models.

| Competitor | Key Features | Difference from NVIDIA TensorRT |
|---|---|---|
| Intel OpenVINO | Supports multiple frameworks including TensorFlow, Caffe, MXNet, etc. | Primarily optimized for Intel hardware and may not perform as well on non-Intel systems |
| Google TensorFlow Lite | Designed for running machine learning models on mobile and IoT devices | Supports a narrower set of operations and targets on-device inference rather than high-throughput GPU inference |
| Microsoft ONNX Runtime | Supports multiple frameworks and provides high performance across hardware types | A general-purpose runtime with pluggable hardware backends (it can even use TensorRT as an execution provider), so it is less specialized for NVIDIA GPUs |
| Amazon AWS Inferentia | AWS-designed inference chip for running machine learning models in the AWS cloud, programmed through the AWS Neuron SDK | Available only within the AWS ecosystem, so it is less flexible than TensorRT across deployment targets |
| Qualcomm Snapdragon Neural Processing Engine | Designed for running machine learning models on mobile devices with Qualcomm Snapdragon processors | Limited to Snapdragon-based devices |

NVIDIA TensorRT is a high-performance deep learning inference optimizer and runtime. It is designed to optimize and accelerate the inference of deep neural networks (DNNs) on NVIDIA GPUs. TensorRT can significantly improve the performance of DNNs, reduce their memory footprint, and lower their latency.

Here are some things you should know about NVIDIA TensorRT:

1. TensorRT works with models from popular deep learning frameworks such as TensorFlow and PyTorch, usually imported through the ONNX format (older releases also read Caffe models directly). This lets developers add TensorRT to their existing deep learning workflows.

2. TensorRT uses advanced optimization techniques to reduce the computation and memory traffic of DNNs. It does this by fusing multiple layers of a network into a single operation, eliminating unnecessary computations, and, when INT8 mode is enabled, quantizing the weights and activations of the network.

3. TensorRT can run DNNs with mixed-precision calculations, which means it can use both 16-bit and 32-bit floating-point numbers to perform computations. This can significantly reduce memory usage and increase throughput.

4. TensorRT also supports dynamic tensor shapes, which allows for more efficient memory usage and reduces the need for padding operations.

5. NVIDIA provides sample code and pre-trained models for common deep learning tasks, such as image classification and object detection, that can be optimized with TensorRT. These models can be fine-tuned for specific applications in a training framework and then converted with TensorRT, or used as a starting point for custom models.

6. TensorRT supports both batched and streaming inference, which allows for high-throughput and low-latency applications. Batched inference can process multiple input samples in parallel, while streaming inference can process input samples as they become available.

7. TensorRT can be deployed on a range of NVIDIA GPUs, including Jetson devices for edge computing and data center GPUs for high-performance computing.
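
To see why dynamic tensor shapes (point 4) can cut padding, compare a hypothetical engine built for one fixed sequence length with one that accepts each batch's actual longest length. The calculation below uses made-up sequence lengths and ignores the details of how TensorRT configures shape ranges; it only counts how many padded positions each approach would have to compute.

```python
def padded_positions(batch_lengths, padded_to):
    """Number of padding slots when every sequence is padded to `padded_to` tokens."""
    return sum(padded_to - n for n in batch_lengths)

# Hypothetical batches of token sequence lengths.
batches = [[12, 40, 33, 7], [128, 96, 110, 64], [5, 9, 3, 11]]
FIXED_LEN = 128  # a static-shape engine pads every sequence to its single input length

fixed_waste = sum(padded_positions(b, FIXED_LEN) for b in batches)
# With dynamic shapes, each batch is padded only up to its own longest sequence.
dynamic_waste = sum(padded_positions(b, max(b)) for b in batches)
total_tokens = sum(sum(b) for b in batches)
print(total_tokens, fixed_waste, dynamic_waste)  # 518 1018 198
```

In this made-up example, the fixed-shape engine computes more padding positions than real tokens, while the dynamic-shape version pads far less; the actual savings depend entirely on how much sequence lengths vary in a workload.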

In conclusion, NVIDIA TensorRT is a powerful tool for optimizing and accelerating the inference of deep neural networks. Its advanced optimization techniques, mixed-precision calculations, and support for multiple deep learning frameworks make it a popular choice among developers. By using TensorRT, developers can improve the performance and efficiency of their deep learning applications, and deliver real-time AI solutions at scale.
