By scanning your package.json or GitHub repo and comparing it with our database of tools categorized by function, we let you know which important tooling your projects are missing. You can also compare your setup against other projects.

In today's digital world, the ability to convert files from one format to another has become an essential task. One such conversion that is often required is from CSV to JSON format. Convert CSV is a free online tool that offers a simple and efficient way to convert CSV files to other formats, including JSON. With its user-friendly interface and fast processing speed, Convert CSV has become a popular choice for businesses and individuals alike who need to convert their data quickly and easily without the hassle of installing any software.

Scale AI is a leading provider of advanced automation tools that enable businesses to build custom deep learning models from scratch. Their AI-powered platform offers a range of features that make it easy for users to create powerful models that can be used to drive innovation and growth in their organizations. With its advanced automation capabilities, Scale AI empowers businesses to leverage the power of artificial intelligence in new and exciting ways, helping them to stay ahead of the competition and achieve their goals. Whether you are a small startup or a large enterprise, Scale AI has the tools and expertise you need to succeed in today's fast-paced digital landscape.

Nara Logics is an innovative AI platform that is transforming the way businesses interpret and harness complex data. By providing cutting-edge technology and advanced algorithms, Nara Logics empowers businesses to make informed decisions faster and more efficiently than ever before. With a focus on enhancing business intelligence, this platform allows organizations to accurately predict consumer behavior and optimize their strategies accordingly. In today's rapidly evolving digital landscape, Nara Logics is revolutionizing the way businesses operate and stay ahead of the competition.

Hexometer is a powerful AI tool that provides round-the-clock protection and growth to eCommerce businesses by keeping a close watch on their websites and vital services. It monitors various aspects of a website, including uptime, performance, user experience, broken pages, errors, SEO, and config issues. With its continuous monitoring, Hexometer ensures that the website remains healthy, secure, and optimized for search engines. It notifies the business owners via multiple channels, such as email, SMS, Slack, Telegram, and Trello, whenever it detects any issues, thus enabling them to take prompt action and prevent any damage to their online presence.

EZQL - Outerbase is a database interface that offers users an easy and intuitive way to explore and collaborate on data without writing SQL. With its user-friendly interface, EZQL - Outerbase gives users access to features such as in-line editing, blocks of SQL queries, and the ability to share queries with team members. It is designed to simplify the process of working with complex data, making it a good fit for businesses and organizations looking to streamline their operations.

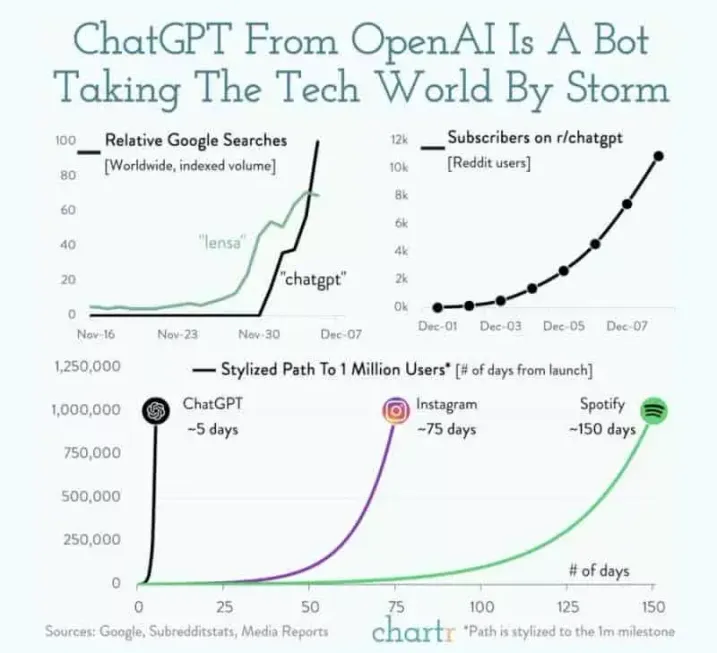

ChatGPT Plus

Introducing ChatGPT

Notion AI

Leverage the limitless power of AI in any Notion page. Write faster, think bigger, and augment creativity. Like magic!

Namecheap Logo Maker

AI Powered Logo Creation

Talk To Books

A new way to explore ideas and discover books. Make a statement or ask a question to browse passages from books using experimental AI.

AI Content Detector

AI Content Detector | GPT-3 | ChatGPT - Writer

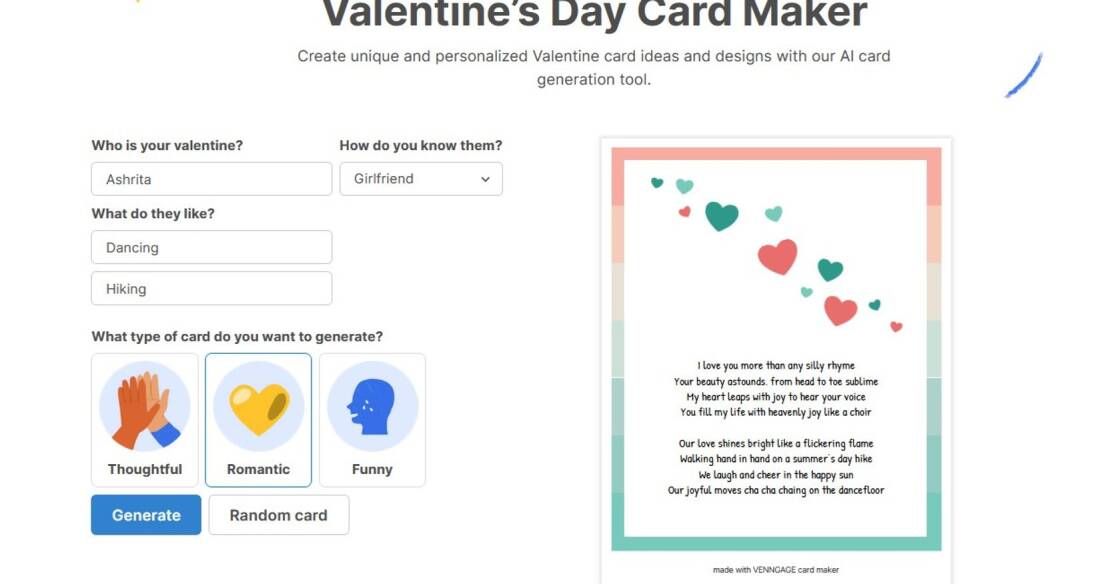

Venngage

Valentine’s Day Card Maker

Topaz Video AI

Unlimited access to the world’s leading production-grade neural networks for video upscaling, deinterlacing, motion interpolation, and shake stabilization - all optimized for your local workstation.

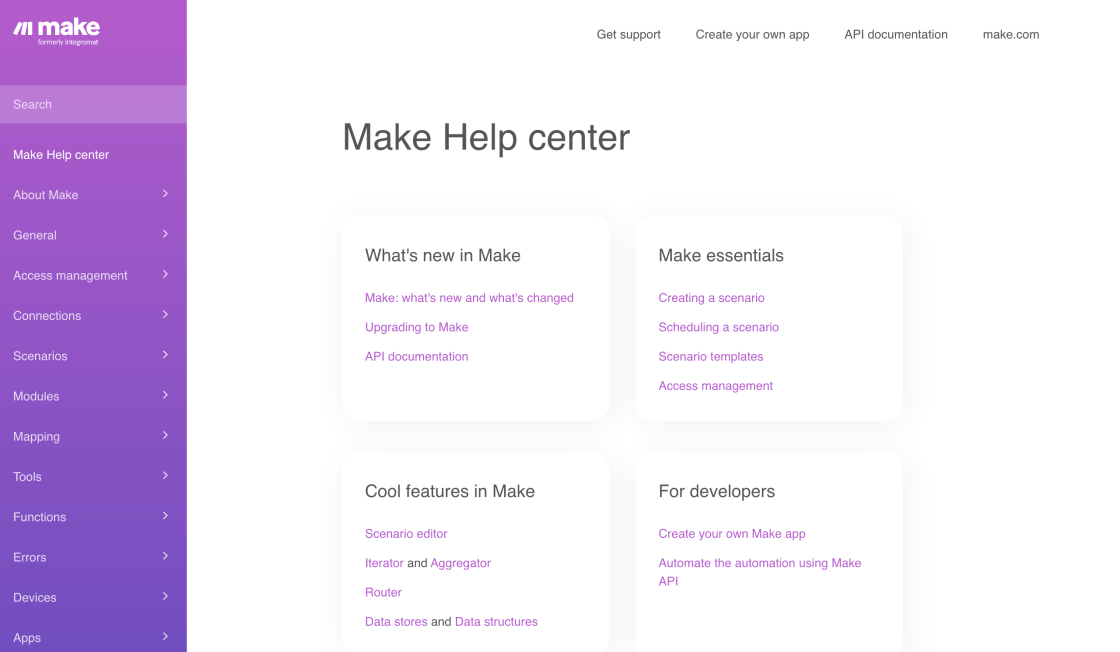

Make (Formerly Known as Integromat)

Automation Platform

Scrapy is a powerful and widely used web crawling framework that enables users to extract data from websites with ease. It is an open source and collaborative tool that has gained immense popularity in the web scraping arena due to its numerous features and functionalities. Scrapy provides a fast and efficient way of collecting data from websites, making it a preferred choice for developers, researchers, and businesses alike. With Scrapy, users can easily navigate through websites, extract data, and store it in various formats. The framework's flexibility and scalability make it an ideal solution for data mining and web scraping tasks. Scrapy is written in Python, a high-level programming language that is easy to learn and widely used in data science and web development. In this article, we will explore the features and benefits of Scrapy and how it can be used to extract data from websites effectively.

Scrapy is an open-source web crawling framework that is used to extract data from websites.

Scrapy is written in Python and uses XPath and CSS selectors for data extraction.

Scrapy can be used for both small and large-scale web scraping projects.

While Scrapy may be a bit challenging for beginners, there are many resources available online to help users get started with the framework.

Scrapy can extract a wide range of data, including text, images, and even structured data like JSON and XML.

Scrapy is unique in that it is a complete framework for building web crawlers, whereas other tools may only provide partial functionality.

Scrapy provides support for handling authentication and cookies during web scraping.

Scrapy sends many requests concurrently using asynchronous networking, which allows for faster and more efficient web scraping.
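How many requests are in flight at once is typically tuned through project settings. The fragment below is a sketch of a `settings.py` excerpt; the setting names are Scrapy's own, but the values are illustrative assumptions rather than recommendations.

```python
# settings.py (fragment): values are illustrative, not recommendations.

# Maximum number of requests Scrapy performs concurrently overall.
CONCURRENT_REQUESTS = 32

# Cap on concurrent requests sent to any single domain.
CONCURRENT_REQUESTS_PER_DOMAIN = 8

# Seconds to wait between consecutive requests to the same domain.
DOWNLOAD_DELAY = 0.25

# Let Scrapy adjust the delay dynamically based on observed latency.
AUTOTHROTTLE_ENABLED = True
```

Higher concurrency speeds up a crawl but puts more load on the target site, so the per-domain cap and delay are usually the first values to adjust.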

Scrapy is compatible with Windows, macOS, and Linux operating systems.

While Scrapy is a powerful tool for web scraping, it may not be suitable for all types of web scraping projects, particularly those that depend on JavaScript rendering, which Scrapy does not perform on its own.

| Competitor | Description | Differences from Scrapy |
|---|---|---|
| Beautiful Soup | A Python library designed for web scraping HTML and XML files. | Does not have built-in support for downloading or handling HTTP requests. |
| Selenium | A suite of tools to automate web browsers and perform web testing. | Primarily used for testing rather than web scraping. Slower than Scrapy due to the use of a browser. |
| Puppeteer | A Node.js library which provides a high-level API to control headless Chrome or Chromium over the DevTools Protocol. | Primarily used for testing rather than web scraping. Works with JavaScript applications better than Scrapy. |
| Requests-HTML | An HTML parsing library that is powered by Requests and BeautifulSoup. | Less powerful and flexible than Scrapy, but easier to use for simple web scraping tasks. |
| ParseHub | A visual web scraping tool that allows you to extract data from dynamic websites. | Limited customization compared to Scrapy. Not open source. |

Scrapy is a powerful web crawling framework that allows you to extract data from websites with ease. It is an open-source and collaborative tool that can be used by developers, data scientists, and researchers to scrape information from multiple sources.

Here are some key things you should know about Scrapy:

1. Scrapy is built on Python: Scrapy is written in Python, one of the most popular programming languages in the world. Python's simplicity and flexibility make it an ideal choice for developing web crawlers.

2. Scrapy is scalable: Scrapy is designed to handle large-scale scraping projects. A single crawl can cover millions of URLs, although spreading a crawl across multiple machines is not built in and requires additional tooling.

3. Scrapy is customizable: Scrapy is highly customizable and can be tailored to your specific needs. You can define your own spiders, pipelines, and middleware to extract and process data in any way you want.

4. Scrapy is easy to use: Scrapy is easy to install and comes with comprehensive documentation. The framework is designed to be user-friendly and intuitive, even for beginners.

5. Scrapy is fast: Scrapy is optimized for speed and performance. It uses asynchronous networking to process many requests concurrently, so it can crawl websites quickly and efficiently.

6. Scrapy supports multiple data formats: Scrapy can parse HTML and XML responses and export scraped data in formats such as JSON, JSON Lines, CSV, and XML. It also supports authentication, cookies, and sessions.

7. Scrapy has a vibrant community: Scrapy has a large and active community of developers who contribute to the project and provide support to other users. You can find tutorials, forums, and other resources online to help you get started with Scrapy.
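The customization point about pipelines can be sketched as follows. An item pipeline is an ordinary Python class with a `process_item` method; the class name and the cleaning rule here are hypothetical, and the sketch assumes items are plain dicts.

```python
class CleanTextPipeline:
    """Item pipeline sketch: strips whitespace from every string field."""

    def process_item(self, item, spider):
        # Assumes `item` is a plain dict yielded by a spider callback.
        for key, value in list(item.items()):
            if isinstance(value, str):
                item[key] = value.strip()
        # Returning the item hands it to the next pipeline stage.
        return item
```

A pipeline is enabled through the `ITEM_PIPELINES` setting, for example `ITEM_PIPELINES = {"myproject.pipelines.CleanTextPipeline": 300}`, where the module path is a placeholder for wherever the class lives in your project.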

In conclusion, Scrapy is a powerful and versatile web crawling framework that can help you extract data from websites quickly and efficiently. Whether you are a developer, data scientist, or researcher, Scrapy can be a valuable tool in your toolkit.
