Dopamine is a powerful research framework that allows researchers to rapidly prototype reinforcement learning algorithms. It provides a streamlined environment for conducting experiments, enabling the comparison of results across different algorithms and parameterizations. With Dopamine, researchers can easily prepare figures for papers and presentations, making it an invaluable tool for those working in the field of machine learning. This introduction will explore the benefits of Dopamine and its impact on the advancement of reinforcement learning algorithms.

Rasa NLU is a powerful open source library designed to facilitate natural language understanding. It allows users to classify intent and extract entities, making it a valuable tool for building conversational AI applications. With its flexible architecture and advanced features, Rasa NLU is rapidly becoming one of the most popular libraries for natural language processing, providing developers with a scalable and customizable solution for their projects.

TIBCO Machine Learning is a powerful platform that facilitates the deployment of machine learning and AI models. It provides an intuitive interface for data scientists to build, train, test, and deploy their models quickly and efficiently. With TIBCO Machine Learning, organizations can easily leverage the power of AI to gain insights and make informed decisions. This platform is designed to support a wide range of use cases, from predictive maintenance to fraud detection and customer segmentation. By offering fast and easy deployment of machine learning models, TIBCO Machine Learning enables businesses to stay competitive in today's rapidly evolving digital landscape.

Splice Machine is a cutting-edge technology that has brought a revolution in the world of real-time databases. This innovative platform integrates artificial intelligence (AI) capabilities, making it more advanced and efficient than traditional databases. With Splice Machine, businesses can leverage real-time data to make informed decisions, improve customer experience and optimize their operations. Its intuitive interface and powerful features have made it a popular choice among organizations that require high-performance data management systems. This paper will explore the unique features of Splice Machine and how it is transforming the way we handle data.

Scikit-learn is an open source Python library that has emerged as a simple and efficient tool for data mining and data analysis. This library provides various machine learning algorithms and tools to perform predictive modeling, classification, regression, clustering, and more. With its easy-to-use interface and rich set of functionalities, Scikit-learn has become one of the most popular libraries among data scientists and machine learning practitioners. Its versatility, performance, and reliability make it an essential tool for data analysis and exploration in various industries, from finance and healthcare to marketing and e-commerce.

Microsoft ML Studio is a cloud-based artificial intelligence platform that provides developers and data scientists with the ability to build, deploy, and manage apps. With its user-friendly interface, this platform offers a wide range of advanced tools and features that enable users to create custom machine learning models and algorithms, integrate with other Microsoft services, and analyze data using powerful visualization tools. Designed to simplify the process of developing AI-powered applications, Microsoft ML Studio offers a scalable and flexible solution that can be customized to meet the specific needs of any organization.

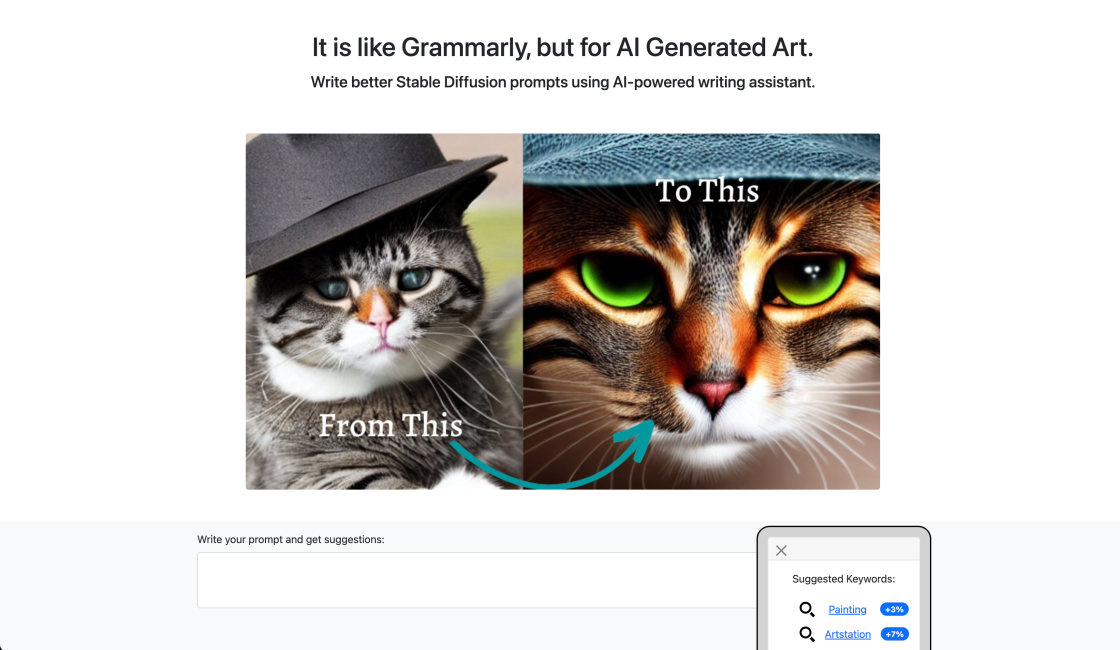

Write Stable Diffusion Prompts

How to Write an Awesome Stable Diffusion Prompt

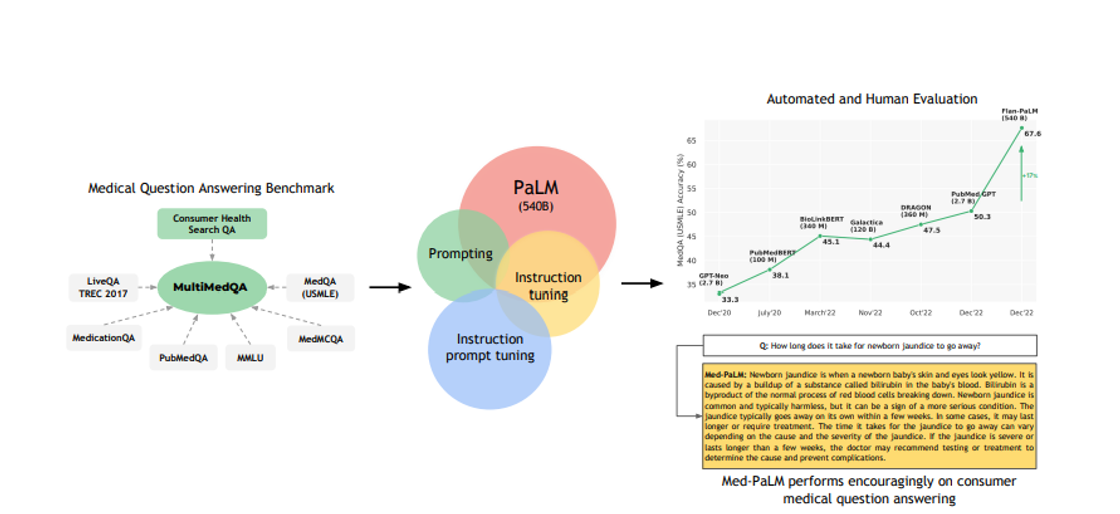

Med-PaLM

AI Powered Medical Imaging

Zapier

OpenAI (Makers of ChatGPT) Integrations | Connect Your Apps with Zapier

GPT-3 Alzheimer

Predicting dementia from spontaneous speech using large language models | PLOS Digital Health

Civitai

Creating Intelligent and Adaptive AI

WatermarkRemover.io

Watermark Remover - Remove Watermarks Online from Images for Free

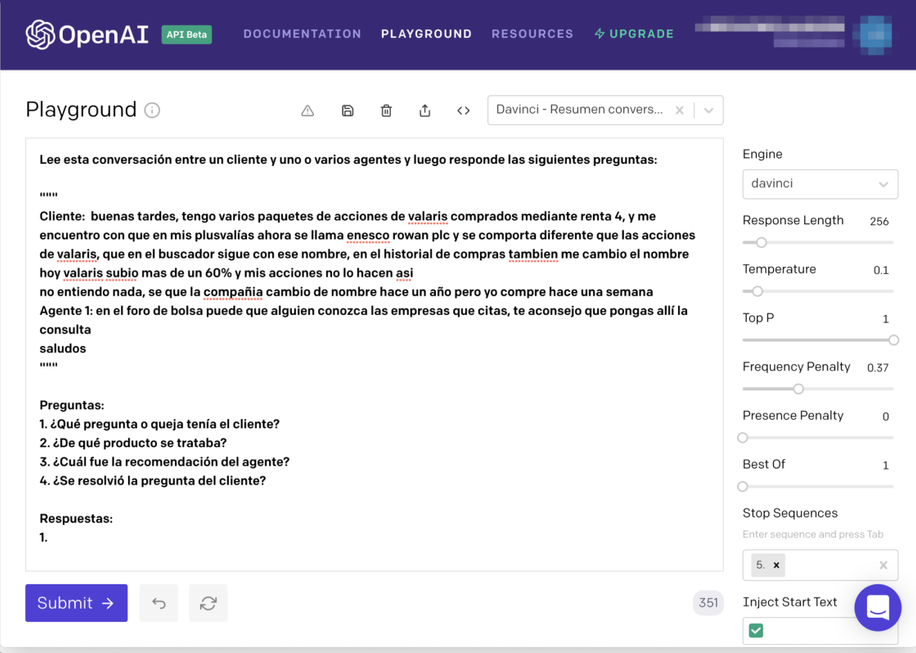

Spanish-speaking Banking Agent

Can GPT-3 help during conversations with our Spanish-speaking customers?

Voice-AI

Voice Analysis and Optimization

Language processing models have become an essential tool in natural language processing (NLP) research and applications. XLM-RoBERTa is a state-of-the-art AI language processing model that is based on the RoBERTa architecture and built using the XLM algorithm. The XLM-RoBERTa model has demonstrated impressive performance in various multilingual NLP tasks, including language understanding, machine translation, and text classification. This model is designed to overcome the limitations of traditional language processing models, which are often restricted to a single language or a small set of languages. The XLM-RoBERTa model is trained on vast amounts of data from multiple languages, making it highly adaptable to new languages and more effective at cross-lingual tasks. The XLM-RoBERTa model has been widely adopted by researchers and practitioners in the NLP community, and its success has opened up new possibilities for multilingual NLP applications. In this article, we will explore the key features and benefits of the XLM-RoBERTa model and its impact on the field of NLP.

XLM-RoBERTa is an AI language processing model based on the RoBERTa architecture that uses the XLM algorithm for cross-lingual language modeling.

XLM-RoBERTa works by training on a large corpus of text data from multiple languages, which enables it to understand and process text in various languages.

The RoBERTa architecture is a neural network architecture that is designed to perform natural language processing tasks such as language modeling, classification, and text generation.

The XLM algorithm is a cross-lingual language modeling algorithm that enables models to understand and process text in multiple languages.

XLM-RoBERTa can process text in multiple languages, which makes it useful for applications such as machine translation, sentiment analysis, and information retrieval.

XLM-RoBERTa is different from other language processing models because it uses the XLM algorithm, which enables it to process text in multiple languages.

The training process for XLM-RoBERTa involves feeding large amounts of text data from multiple languages into the model and adjusting the weights of the neural network so that it can accurately predict the next word in a sentence.

XLM-RoBERTa can be used for a variety of applications, including machine translation, text classification, sentiment analysis, and information retrieval.

Yes, XLM-RoBERTa is an open-source project that is freely available to developers and researchers.

Yes, XLM-RoBERTa can be fine-tuned for specific tasks such as sentiment analysis or machine translation by training it on a smaller dataset that is specific to the task at hand.

| Competitor | Description | Difference |

|---|---|---|

| BERT | BERT is another popular AI language processing model based on the transformer architecture. Unlike XLM-RoBERTa, BERT is monolingual and trained on English language corpora. | XLM-RoBERTa is a cross-lingual model that can understand multiple languages whereas BERT is limited to English. |

| GPT-3 | GPT-3 is a state-of-the-art AI language processing model developed by OpenAI. It uses a transformer-based architecture similar to XLM-RoBERTa, but it is not cross-lingual. | XLM-RoBERTa can understand multiple languages while GPT-3 is limited to English. |

| T5 | T5 is a transformer-based AI language processing model developed by Google. It is designed to perform various natural language tasks like translation, summarization, and question-answering. Unlike XLM-RoBERTa, T5 is not cross-lingual. | XLM-RoBERTa is capable of understanding multiple languages whereas T5 is limited to specific language tasks. |

| ALBERT | ALBERT (A Lite BERT) is a modified version of BERT that is designed to be more efficient and have fewer parameters. It is also a monolingual model like BERT. | XLM-RoBERTa is a cross-lingual model that can understand multiple languages while ALBERT is limited to English. |

XLM-RoBERTa is an advanced artificial intelligence language processing model that utilizes the RoBERTa architecture. It is a cross-lingual language model that is based on the XLM algorithm, which allows it to process data from different languages.

The XLM-RoBERTa model is designed to understand and interpret natural language text in a variety of languages. It has been trained on a large corpus of text, allowing it to accurately predict the meaning of words and phrases in context.

One of the key advantages of XLM-RoBERTa is its ability to process multiple languages simultaneously. This means that it can analyze text from different sources, including social media, news articles, and academic research papers, without requiring separate models for each language.

The XLM-RoBERTa model has been used in a wide range of applications, including machine translation, sentiment analysis, and text classification. It has also been used to improve the accuracy of voice recognition systems and chatbots.

Overall, XLM-RoBERTa is a powerful tool for natural language processing that offers significant advantages over traditional language models. Its ability to process multiple languages and accurately predict the meaning of words and phrases in context makes it a valuable asset for businesses and organizations operating in a global marketplace.

TOP